Developer Guide

Amazon Keyspaces (for Apache Cassandra)

Copyright © 2024 Amazon Web Services, Inc. and/or its affiliates. All rights reserved.

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Amazon Keyspaces (for Apache Cassandra): Developer Guide

Copyright © 2024 Amazon Web Services, Inc. and/or its affiliates. All rights reserved.

Amazon's trademarks and trade dress may not be used in connection with any product or service

that is not Amazon's, in any manner that is likely to cause confusion among customers, or in any

manner that disparages or discredits Amazon. All other trademarks not owned by Amazon are

the property of their respective owners, who may or may not be affiliated with, connected to, or

sponsored by Amazon.

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Table of Contents

What is Amazon Keyspaces? ........................................................................................................... 1

How it works ................................................................................................................................................. 1

High-level architecture ........................................................................................................................... 2

Cassandra data model ............................................................................................................................ 4

Accessing Amazon Keyspaces ............................................................................................................... 5

Use cases ........................................................................................................................................................ 6

What is CQL? ................................................................................................................................................. 7

Compare Amazon Keyspaces with Cassandra ................................................................................ 9

Functional differences with Apache Cassandra .................................................................................... 10

Apache Cassandra APIs, operations, and data types ..................................................................... 11

Asynchronous creation and deletion of keyspaces and tables .................................................... 11

Authentication and authorization ..................................................................................................... 11

Batch ........................................................................................................................................................ 11

Cluster configuration ............................................................................................................................ 11

Connections ............................................................................................................................................ 11

IN keyword ............................................................................................................................................ 12

CQL query throughput tuning ........................................................................................................... 12

FROZEN collections ............................................................................................................................... 13

Lightweight transactions ..................................................................................................................... 13

Load balancing ...................................................................................................................................... 14

Pagination ............................................................................................................................................... 14

Partitioners ............................................................................................................................................. 14

Prepared statements ............................................................................................................................ 14

Range delete .......................................................................................................................................... 15

System tables ........................................................................................................................................ 15

Timestamps ............................................................................................................................................ 15

Supported Cassandra APIs, operations, functions, and data types .................................................. 16

Cassandra API support ......................................................................................................................... 16

Cassandra control plane API support ............................................................................................... 18

Cassandra data plane API support .................................................................................................... 18

Cassandra function support ............................................................................................................... 19

Cassandra data type support ............................................................................................................. 19

Supported Cassandra consistency levels ............................................................................................... 21

Write consistency levels ...................................................................................................................... 21

iii

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Read consistency levels ....................................................................................................................... 22

Unsupported consistency levels ........................................................................................................ 23

Migrating to Amazon Keyspaces .................................................................................................. 24

Migrating from Cassandra ........................................................................................................................ 25

Compatibility .......................................................................................................................................... 26

Estimate pricing .................................................................................................................................... 26

Migration strategy ................................................................................................................................ 35

Online migration ................................................................................................................................... 36

Offline migration .................................................................................................................................. 47

Hybrid migration ................................................................................................................................... 49

Migration tools ........................................................................................................................................... 52

Loading data using cqlsh .................................................................................................................... 54

Loading data using DSBulk ................................................................................................................. 66

Accessing Amazon Keyspaces ....................................................................................................... 79

Setting up AWS Identity and Access Management ............................................................................. 79

Sign up for an AWS account .............................................................................................................. 79

Create a user with administrative access ......................................................................................... 79

Setting up Amazon Keyspaces ................................................................................................................ 81

Using the console ....................................................................................................................................... 82

Using AWS CloudShell .............................................................................................................................. 82

Obtaining IAM permissions for AWS CloudShell ............................................................................ 83

Interacting with Amazon Keyspaces using AWS CloudShell ........................................................ 84

Create programmatic access credentials ............................................................................................... 85

Create service-specific credentials .................................................................................................... 86

Create IAM credentials for AWS authentication ............................................................................. 88

Service endpoints ....................................................................................................................................... 96

Ports and protocols .............................................................................................................................. 96

Global endpoints ................................................................................................................................... 97

AWS GovCloud (US) Region FIPS endpoints ................................................................................... 99

China Regions endpoints .................................................................................................................. 100

Using cqlsh ............................................................................................................................................. 100

Using the cqlsh-expansion ......................................................................................................... 101

How to manually configure cqlsh connections for TLS ........................................................... 106

Using the AWS CLI .................................................................................................................................. 107

Downloading and Configuring the AWS CLI ................................................................................. 108

Using the AWS CLI with Amazon Keyspaces ................................................................................. 108

iv

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Using the API ............................................................................................................................................ 112

Using a Cassandra client driver ............................................................................................................ 112

Using a Cassandra Java client driver .............................................................................................. 113

Using a Cassandra Python client driver ......................................................................................... 126

Using a Cassandra Node.js client driver ........................................................................................ 129

Using a Cassandra .NET Core client driver .................................................................................... 133

Using a Cassandra Go client driver ................................................................................................. 135

Using a Cassandra Perl client driver ............................................................................................... 140

Connection tutorials ................................................................................................................................ 142

Connecting with VPC endpoints ..................................................................................................... 142

Connecting with Apache Spark ....................................................................................................... 161

Connecting from Amazon EKS ........................................................................................................ 174

Configure cross-account access ............................................................................................................. 194

Configure cross-account access in a shared VPC ......................................................................... 195

Configure cross-account access without a shared VPC ............................................................... 198

Getting started ............................................................................................................................ 200

Prerequisites .............................................................................................................................................. 201

Create a keyspace .................................................................................................................................... 201

Check keyspace creation status ............................................................................................................ 205

Create a table ........................................................................................................................................... 205

Check table creation status ................................................................................................................... 214

CRUD operations ...................................................................................................................................... 214

Create .................................................................................................................................................... 215

Read ....................................................................................................................................................... 219

Update .................................................................................................................................................. 223

Delete .................................................................................................................................................... 224

Delete a table ........................................................................................................................................... 226

Delete a keyspace .................................................................................................................................... 229

Managing serverless resources ................................................................................................... 232

Estimate row size ..................................................................................................................................... 233

Estimate capacity consumption ............................................................................................................ 236

Estimate the capacity consumption of range queries ................................................................. 237

Estimate the read capacity consumption of limit queries ......................................................... 238

Estimate the read capacity consumption of table scans ............................................................ 239

Estimate capacity consumption of LWT ........................................................................................ 239

Estimate capacity consumption of static columns ...................................................................... 240

v

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Estimate capacity for a multi-Region table .................................................................................. 244

Estimate capacity consumption with CloudWatch ...................................................................... 245

Configure read/write capacity modes ................................................................................................. 246

Configure on-demand capacity mode ............................................................................................ 246

Configure provisioned throughput capacity mode ...................................................................... 249

View the capacity mode of a table ................................................................................................ 250

Change capacity mode ...................................................................................................................... 251

Manage throughput capacity with auto scaling ................................................................................ 252

How Amazon Keyspaces automatic scaling works ....................................................................... 253

How auto scaling works for multi-Region tables ........................................................................ 254

Usage notes ......................................................................................................................................... 255

Configure and update auto scaling policies .................................................................................. 256

Use burst capacity ................................................................................................................................... 269

Working with Amazon Keyspaces features ................................................................................ 270

System keyspaces .................................................................................................................................... 271

system ................................................................................................................................................. 272

system_schema ................................................................................................................................. 273

system_schema_mcs ....................................................................................................................... 274

system_multiregion_info ......................................................................................................... 276

Multi-Region Replication ........................................................................................................................ 277

Benefits ................................................................................................................................................. 278

Capacity modes and pricing ............................................................................................................. 279

How it works ....................................................................................................................................... 280

Usage notes ......................................................................................................................................... 283

Configure Multi-Region Replication ................................................................................................ 285

Backup and restore with point-in-time recovery .............................................................................. 308

How it works ....................................................................................................................................... 309

Use point-in-time recovery ............................................................................................................... 313

Expire data with Time to Live ............................................................................................................... 326

Integration with AWS services ......................................................................................................... 327

Create table with default TTL value .............................................................................................. 327

Update table default TTL value ...................................................................................................... 331

Create table with custom TTL ......................................................................................................... 335

Update table custom TTL ................................................................................................................. 337

Use INSERT to set custom TTL for new rows .............................................................................. 339

Use UPDATE to set custom TTL for rows and columns .............................................................. 340

vi

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Client-side timestamps ........................................................................................................................... 341

Integration with AWS services ......................................................................................................... 343

Create table with client-side timestamps ...................................................................................... 343

Configure client-side timestamps ................................................................................................... 346

Use client-side timestamps in queries ........................................................................................... 349

Working with CQL queries ..................................................................................................................... 350

Use IN SELECT ................................................................................................................................... 350

Order results ........................................................................................................................................ 354

Paginate results .................................................................................................................................. 355

Working with partitioners ...................................................................................................................... 356

Change the partitioner ...................................................................................................................... 357

Working with AWS SDKs ........................................................................................................................ 358

Working with tags ................................................................................................................................... 359

Tagging restrictions ............................................................................................................................ 360

Tag keyspaces and tables ................................................................................................................. 361

Create cost allocation reports .......................................................................................................... 371

Create AWS CloudFormation resources ............................................................................................... 372

Amazon Keyspaces and AWS CloudFormation templates .......................................................... 372

Learn more about AWS CloudFormation ....................................................................................... 372

NoSQL Workbench ................................................................................................................................... 373

Download ............................................................................................................................................. 374

Getting started .................................................................................................................................... 374

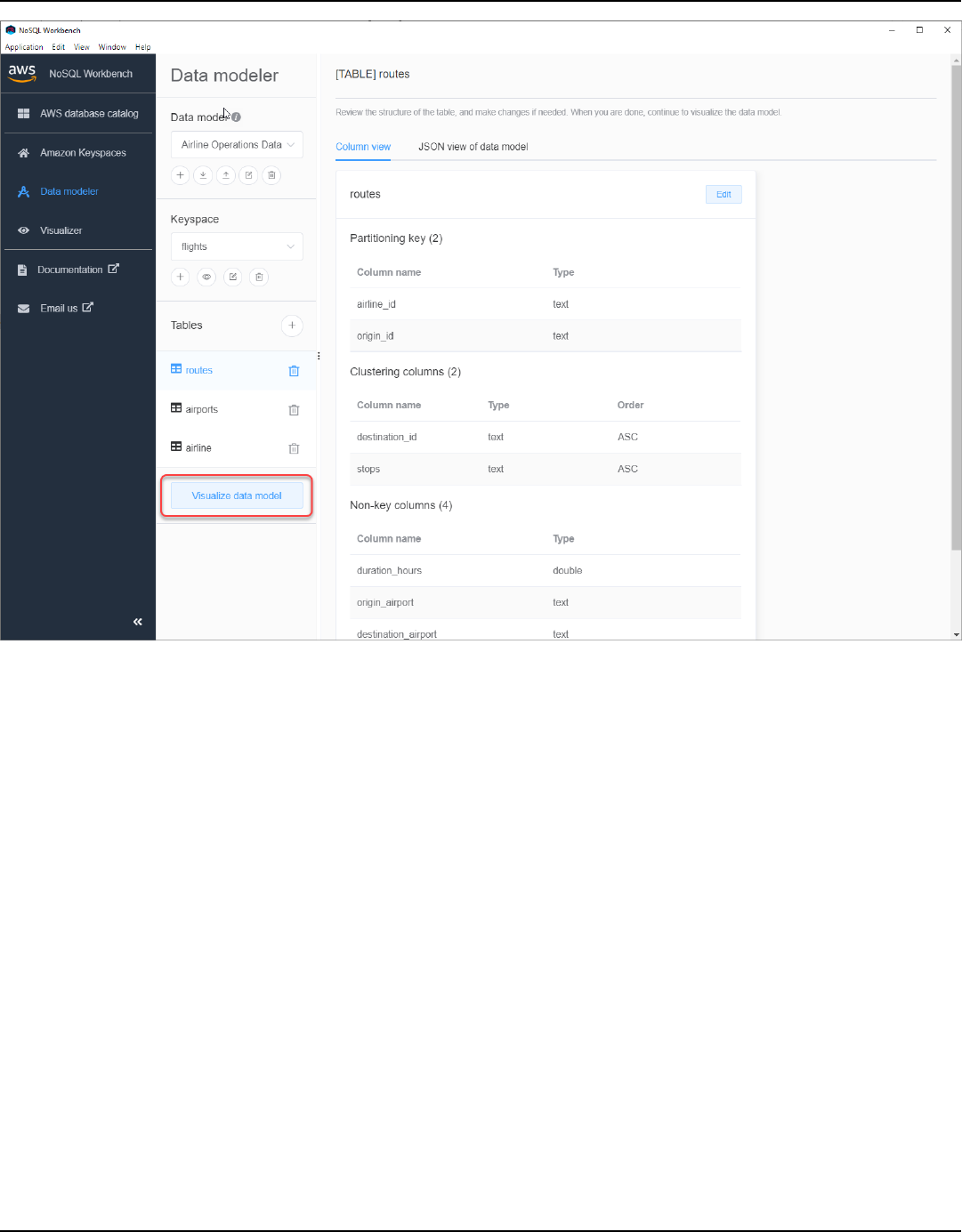

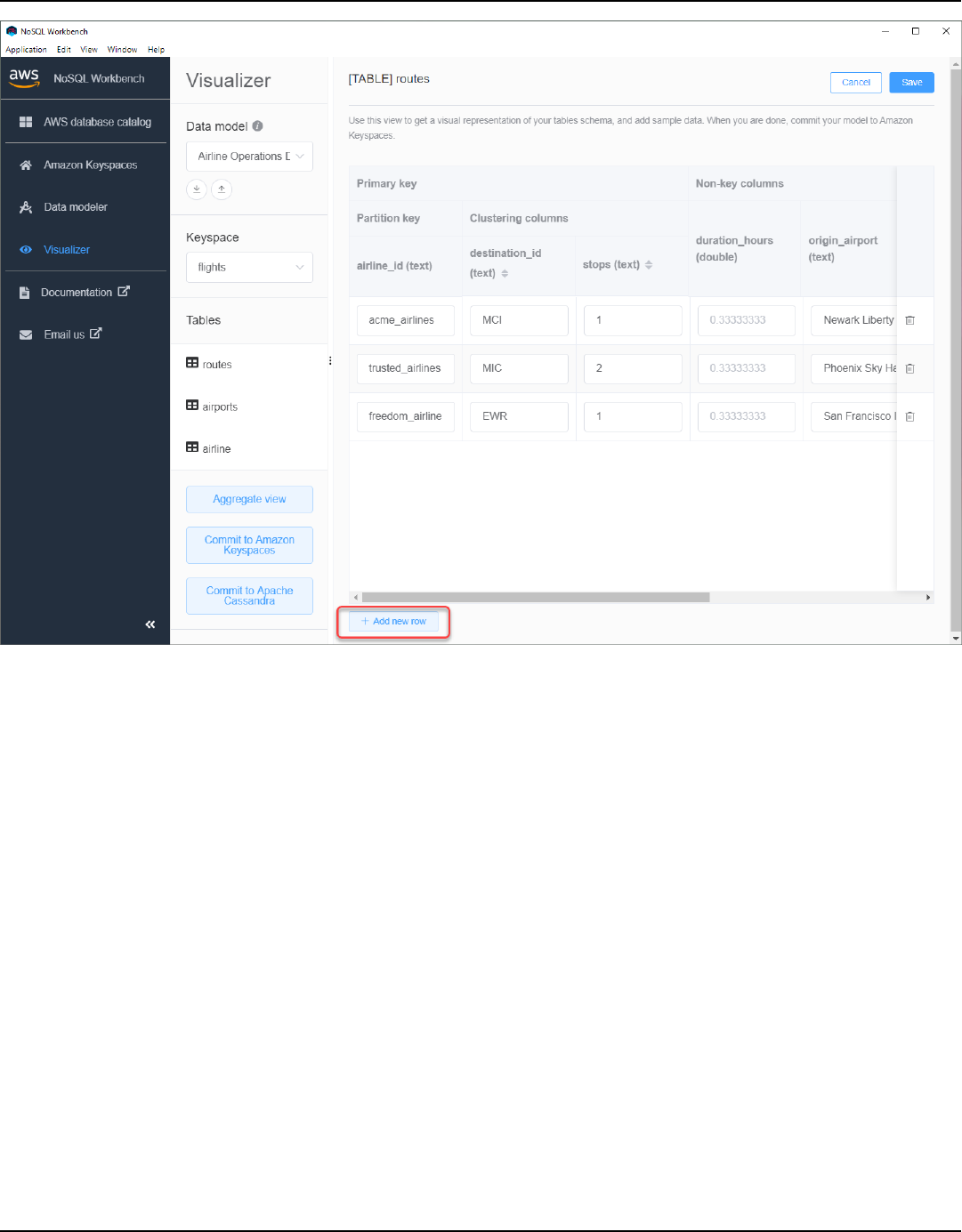

Visualize a data model ...................................................................................................................... 376

Create a data model .......................................................................................................................... 380

Edit a data model ............................................................................................................................... 382

Commit a data model ....................................................................................................................... 384

Sample data models .......................................................................................................................... 395

Release history .................................................................................................................................... 396

Code examples ............................................................................................................................. 397

Basics .......................................................................................................................................................... 402

Hello Amazon Keyspaces .................................................................................................................. 403

Learn the basics .................................................................................................................................. 408

Actions .................................................................................................................................................. 470

Libraries and tools ....................................................................................................................... 515

Libraries and examples ........................................................................................................................... 515

Amazon Keyspaces (for Apache Cassandra) developer toolkit .................................................. 515

vii

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Amazon Keyspaces (for Apache Cassandra) examples ................................................................ 515

AWS Signature Version 4 (SigV4) authentication plugins .......................................................... 515

Highlighted sample and developer tool repos .................................................................................. 516

Amazon Keyspaces Protocol Buffers .............................................................................................. 516

AWS CloudFormation template to create Amazon CloudWatch dashboard for Amazon

Keyspaces (for Apache Cassandra) metrics ................................................................................... 516

Using Amazon Keyspaces (for Apache Cassandra) with AWS Lambda ..................................... 516

Using Amazon Keyspaces (for Apache Cassandra) with Spring ................................................. 517

Using Amazon Keyspaces (for Apache Cassandra) with Scala ................................................... 517

Using Amazon Keyspaces (for Apache Cassandra) with AWS Glue ........................................... 517

Amazon Keyspaces (for Apache Cassandra) Cassandra query language (CQL) to AWS

CloudFormation converter ................................................................................................................ 517

Amazon Keyspaces (for Apache Cassandra) helpers for Apache Cassandra driver for Java . 518

Amazon Keyspaces (for Apache Cassandra) snappy compression demo ................................. 518

Amazon Keyspaces (for Apache Cassandra) and Amazon S3 codec demo .............................. 518

Best practices ............................................................................................................................... 519

NoSQL design ........................................................................................................................................... 520

NoSQL vs. RDBMS .............................................................................................................................. 521

Two key concepts ............................................................................................................................... 521

General approach ............................................................................................................................... 522

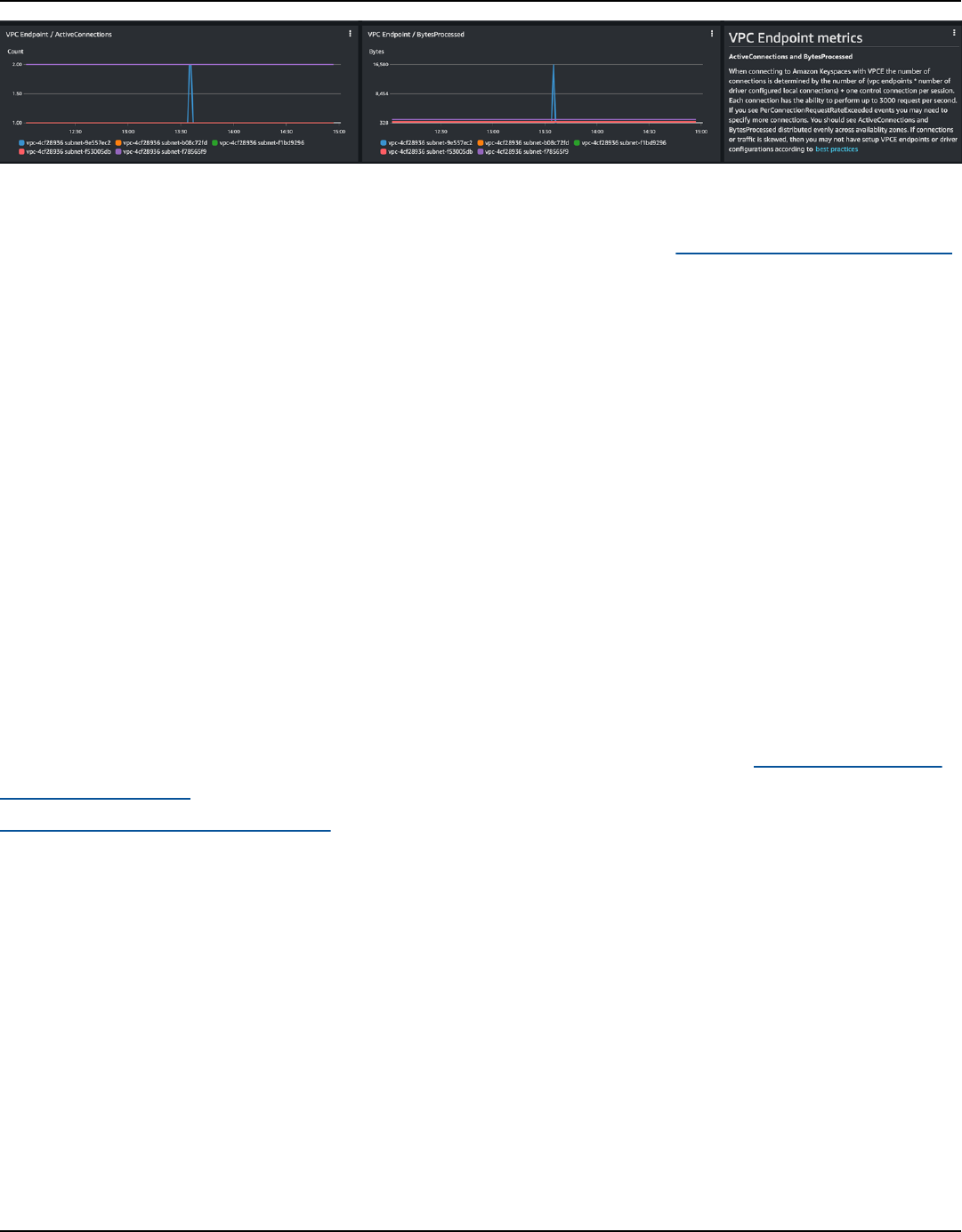

Connections ............................................................................................................................................... 523

How they work .................................................................................................................................... 523

How to configure connections ......................................................................................................... 524

VPC endpoint connections ............................................................................................................... 526

How to monitor connections ........................................................................................................... 527

How to handle connection errors ................................................................................................... 528

Data modeling .......................................................................................................................................... 528

Partition key design ........................................................................................................................... 529

Cost optimization ..................................................................................................................................... 531

Evaluate your costs at the table level ............................................................................................ 532

Evaluate your table's capacity mode .............................................................................................. 534

Evaluate your table's Application Auto Scaling settings ............................................................ 538

Identify your unused resources ....................................................................................................... 545

Evaluate your table usage patterns ................................................................................................ 550

Evaluate your provisioned capacity for right-sized provisioning .............................................. 551

Troubleshooting ........................................................................................................................... 561

viii

Amazon Keyspaces (for Apache Cassandra) Developer Guide

General errors ........................................................................................................................................... 562

General errors ...................................................................................................................................... 562

Connection errors .................................................................................................................................... 564

Errors connecting to an Amazon Keyspaces endpoint ................................................................ 564

Capacity management errors ................................................................................................................ 576

Serverless capacity errors ................................................................................................................. 576

Data definition language errors ............................................................................................................ 581

Data definition language errors ...................................................................................................... 581

Monitoring Amazon Keyspaces ................................................................................................... 586

Monitoring with CloudWatch ................................................................................................................ 587

Using metrics ....................................................................................................................................... 588

Metrics and dimensions .................................................................................................................... 589

Creating alarms ................................................................................................................................... 609

Logging with CloudTrail ......................................................................................................................... 610

Configuring log file entries in CloudTrail ...................................................................................... 610

DDL information in CloudTrail ......................................................................................................... 611

DML information in CloudTrail ........................................................................................................ 612

Understanding log file entries ......................................................................................................... 613

Security ........................................................................................................................................ 624

Data protection ........................................................................................................................................ 625

Encryption at rest ............................................................................................................................... 626

Encryption in transit .......................................................................................................................... 646

Internetwork traffic privacy .............................................................................................................. 646

AWS Identity and Access Management ............................................................................................... 648

Audience ............................................................................................................................................... 648

Authenticating with identities ......................................................................................................... 649

Managing access using policies ....................................................................................................... 652

How Amazon Keyspaces works with IAM ...................................................................................... 654

Identity-based policy examples ....................................................................................................... 659

AWS managed policies ...................................................................................................................... 666

Troubleshooting .................................................................................................................................. 673

Using service-linked roles ................................................................................................................. 676

Compliance validation ............................................................................................................................ 683

Resilience ................................................................................................................................................... 685

Infrastructure security ............................................................................................................................. 685

Using interface VPC endpoints ........................................................................................................ 686

ix

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Configuration and vulnerability analysis for Amazon Keyspaces ................................................... 693

Security best practices ............................................................................................................................ 693

Preventative security best practices ............................................................................................... 693

Detective security best practices ..................................................................................................... 695

CQL language reference .............................................................................................................. 697

Language elements ................................................................................................................................. 698

Identifiers ............................................................................................................................................. 698

Constants .............................................................................................................................................. 698

Terms ..................................................................................................................................................... 699

Data types ............................................................................................................................................ 699

JSON encoding of Amazon Keyspaces data types ....................................................................... 703

DDL statements ........................................................................................................................................ 706

Keyspaces ............................................................................................................................................. 706

Tables .................................................................................................................................................... 709

DML statements ....................................................................................................................................... 721

SELECT .................................................................................................................................................. 722

INSERT ................................................................................................................................................... 724

UPDATE ................................................................................................................................................. 726

DELETE .................................................................................................................................................. 728

Built-in functions ..................................................................................................................................... 728

Scalar functions .................................................................................................................................. 729

Quotas .......................................................................................................................................... 731

Amazon Keyspaces service quotas ....................................................................................................... 731

Increasing or decreasing throughput (for provisioned tables) ........................................................ 736

Increasing provisioned throughput ................................................................................................. 736

Decreasing provisioned throughput ............................................................................................... 736

Amazon Keyspaces encryption at rest ................................................................................................. 737

Document history ........................................................................................................................ 738

x

Amazon Keyspaces (for Apache Cassandra) Developer Guide

What is Amazon Keyspaces (for Apache Cassandra)?

Amazon Keyspaces (for Apache Cassandra) is a scalable, highly available, and managed Apache

Cassandra–compatible database service. With Amazon Keyspaces, you don’t have to provision,

patch, or manage servers, and you don’t have to install, maintain, or operate software.

Amazon Keyspaces is serverless, so you pay for only the resources that you use, and the service

automatically scales tables up and down in response to application traffic. You can build

applications that serve thousands of requests per second with virtually unlimited throughput and

storage.

Note

Apache Cassandra is an open-source, wide-column datastore that is designed to handle

large amounts of data. For more information, see Apache Cassandra.

Amazon Keyspaces makes it easy to migrate, run, and scale Cassandra workloads in the AWS Cloud.

With just a few clicks on the AWS Management Console or a few lines of code, you can create

keyspaces and tables in Amazon Keyspaces, without deploying any infrastructure or installing

software.

With Amazon Keyspaces, you can run your existing Cassandra workloads on AWS using the same

Cassandra application code and developer tools that you use today.

For a list of available AWS Regions and endpoints, see Service endpoints for Amazon Keyspaces.

We recommend that you start by reading the following sections:

Topics

• Amazon Keyspaces: How it works

• Amazon Keyspaces use cases

• What is Cassandra Query Language (CQL)?

Amazon Keyspaces: How it works

Amazon Keyspaces removes the administrative overhead of managing Cassandra. To understand

why, it's helpful to begin with Cassandra architecture and then compare it to Amazon Keyspaces.

How it works 1

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Topics

• High-level architecture: Apache Cassandra vs. Amazon Keyspaces

• Cassandra data model

• Accessing Amazon Keyspaces from an application

High-level architecture: Apache Cassandra vs. Amazon Keyspaces

Traditional Apache Cassandra is deployed in a cluster made up of one or more nodes. You are

responsible for managing each node and adding and removing nodes as your cluster scales.

A client program accesses Cassandra by connecting to one of the nodes and issuing Cassandra

Query Language (CQL) statements. CQL is similar to SQL, the popular language used in relational

databases. Even though Cassandra is not a relational database, CQL provides a familiar interface

for querying and manipulating data in Cassandra.

The following diagram shows a simple Apache Cassandra cluster, consisting of four nodes.

A production Cassandra deployment might consist of hundreds of nodes, running on hundreds

of physical computers across one or more physical data centers. This can cause an operational

High-level architecture 2

Amazon Keyspaces (for Apache Cassandra) Developer Guide

burden for application developers who need to provision, patch, and manage servers in addition to

installing, maintaining, and operating software.

With Amazon Keyspaces (for Apache Cassandra), you don’t need to provision, patch, or manage

servers, so you can focus on building better applications. Amazon Keyspaces offers two throughput

capacity modes for reads and writes: on-demand and provisioned. You can choose your table’s

throughput capacity mode to optimize the price of reads and writes based on the predictability and

variability of your workload.

With on-demand mode, you pay for only the reads and writes that your application actually

performs. You do not need to specify your table’s throughput capacity in advance. Amazon

Keyspaces accommodates your application traffic almost instantly as it ramps up or down, making

it a good option for applications with unpredictable traffic.

Provisioned capacity mode helps you optimize the price of throughput if you have predictable

application traffic and can forecast your table’s capacity requirements in advance. With provisioned

capacity mode, you specify the number of reads and writes per second that you expect your

application to perform. You can increase and decrease the provisioned capacity for your table

automatically by enabling automatic scaling.

You can change the capacity mode of your table once per day as you learn more about your

workload’s traffic patterns, or if you expect to have a large burst in traffic, such as from a major

event that you anticipate will drive a lot of table traffic. For more information about read and write

capacity provisioning, see the section called “Configure read/write capacity modes”.

Amazon Keyspaces (for Apache Cassandra) stores three copies of your data in multiple Availability

Zones for durability and high availability. In addition, you benefit from a data center and network

architecture that is built to meet the requirements of the most security-sensitive organizations.

Encryption at rest is automatically enabled when you create a new Amazon Keyspaces table and all

client connections require Transport Layer Security (TLS). Additional AWS security features include

monitoring, AWS Identity and Access Management, and virtual private cloud (VPC) endpoints. For

an overview of all available security features, see Security.

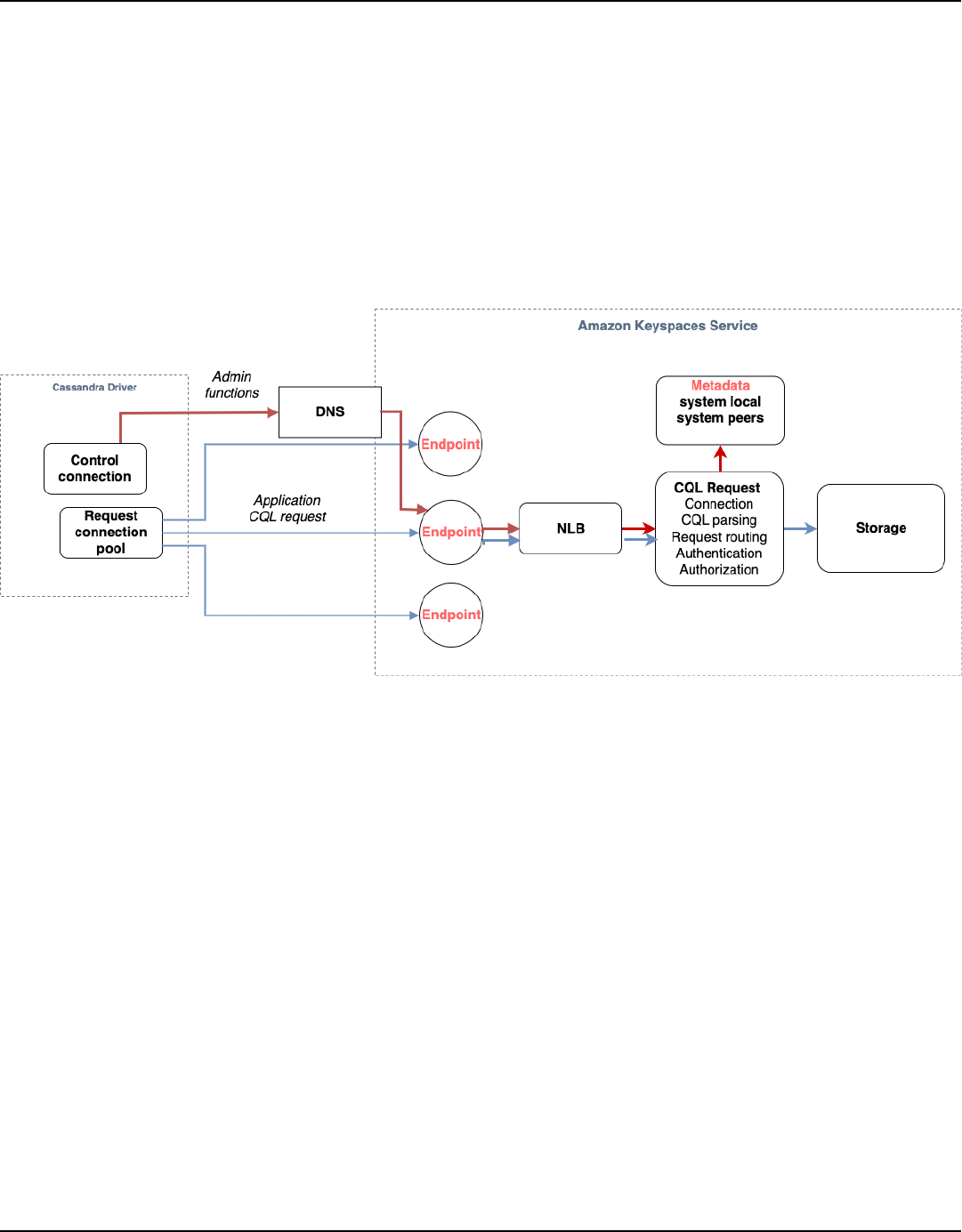

The following diagram shows the architecture of Amazon Keyspaces.

High-level architecture 3

Amazon Keyspaces (for Apache Cassandra) Developer Guide

A client program accesses Amazon Keyspaces by connecting to a predetermined endpoint

(hostname and port number) and issuing CQL statements. For a list of available endpoints, see the

section called “Service endpoints”.

Cassandra data model

How you model your data for your business case is critical to achieving optimal performance from

Amazon Keyspaces. A poor data model can significantly degrade performance.

Even though CQL looks similar to SQL, the backends of Cassandra and relational databases are

very different and must be approached differently. The following are some of the more significant

issues to consider:

Storage

You can visualize your Cassandra data in tables, with each row representing a record and each

column a field within that record.

Table design: Query first

There are no JOINs in CQL. Therefore, you should design your tables with the shape of your

data and how you need to access it for your business use cases. This might result in de-

normalization with duplicated data. You should design each of your tables specifically for a

particular access pattern.

Cassandra data model 4

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Partitions

Your data is stored in partitions on disk. The number of partitions your data is stored in and

how it is distributed across the partitions is determined by your partition key. How you define

your partition key can have a significant impact upon the performance of your queries. For best

practices, see the section called “Partition key design”.

Primary key

In Cassandra, data is stored as a key-value pair. Every Cassandra table must have a primary key,

which is the unique key to each row in the table. The primary key is the composite of a required

partition key and optional clustering columns. The data that comprises the primary key must be

unique across all records in a table.

• Partition key – The partition key portion of the primary key is required and determines which

partition of your cluster the data is stored in. The partition key can be a single column, or it

can be a compound value composed of two or more columns. You would use a compound

partition key if a single column partition key would result in a single partition or a very few

partitions having most of the data and thus bearing the majority of the disk I/O operations.

• Clustering column – The optional clustering column portion of your primary key determines

how the data is clustered and sorted within each partition. If you include a clustering column

in your primary key, the clustering column can have one or more columns. If there are

multiple columns in the clustering column, the sorting order is determined by the order that

the columns are listed in the clustering column, from left to right.

For more information about NoSQL design and Amazon Keyspaces, see the section called “NoSQL

design”. For more information about Amazon Keyspaces and data modeling, see the section called

“Data modeling”.

Accessing Amazon Keyspaces from an application

Amazon Keyspaces (for Apache Cassandra) implements the Apache Cassandra Query Language

(CQL) API, so you can use CQL and Cassandra drivers that you already use. Updating your

application is as easy as updating your Cassandra driver or cqlsh configuration to point to the

Amazon Keyspaces service endpoint. For more information about the required credentials, see the

section called “Create IAM credentials for AWS authentication”.

Accessing Amazon Keyspaces 5

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Note

To help you get started, you can find end-to-end code samples of connecting to Amazon

Keyspaces by using various Cassandra client drivers in the Amazon Keyspaces code example

repository on GitHub.

Consider the following Python program, which connects to a Cassandra cluster and queries a table.

from cassandra.cluster import Cluster

#TLS/SSL configuration goes here

ksp = 'MyKeyspace'

tbl = 'WeatherData'

cluster = Cluster(['NNN.NNN.NNN.NNN'], port=NNNN)

session = cluster.connect(ksp)

session.execute('USE ' + ksp)

rows = session.execute('SELECT * FROM ' + tbl)

for row in rows:

print(row)

To run the same program against Amazon Keyspaces, you need to:

• Add the cluster endpoint and port: For example, the host can be replaced with a service

endpoint, such as cassandra.us-east-2.amazonaws.com and the port number with: 9142.

• Add the TLS/SSL configuration: For more information on adding the TLS/SSL configuration to

connect to Amazon Keyspaces by using a Cassandra client Python driver, see Using a Cassandra

Python client driver to access Amazon Keyspaces programmatically.

Amazon Keyspaces use cases

The following are just some of the ways in which you can use Amazon Keyspaces:

• Build applications that require low latency – Process data at high speeds for applications

that require single-digit-millisecond latency, such as industrial equipment maintenance, trade

monitoring, fleet management, and route optimization.

Use cases 6

Amazon Keyspaces (for Apache Cassandra) Developer Guide

• Build applications using open-source technologies – Build applications on AWS using open-

source Cassandra APIs and drivers that are available for a wide range of programming languages,

such as Java, Python, Ruby, Microsoft .NET, Node.js, PHP, C++, Perl, and Go. For code examples,

see Libraries and tools.

• Move your Cassandra workloads to the cloud – Managing Cassandra tables yourself is time-

consuming and expensive. With Amazon Keyspaces, you can set up, secure, and scale Cassandra

tables in the AWS Cloud without managing infrastructure. For more information, see Managing

serverless resources.

What is Cassandra Query Language (CQL)?

Cassandra Query Language (CQL) is the primary language for communicating with Apache

Cassandra. Amazon Keyspaces (for Apache Cassandra) is compatible with the CQL 3.x API

(backward-compatible with version 2.x).

In CQL, data is stored in tables, columns, and rows. In this sense CQL is similar to Structured Query

Language (SQL). These are the key concepts in CQL.

• CQL elements – The fundamental elements of CQL are identifiers, constants, terms, and data

types.

• Data Definition Language (DDL) – DDL statements are used to manage data structures like

keyspaces and tables, which are AWS resources in Amazon Keyspaces. DDL statements are

control plane operations in AWS.

• Data Manipulation Language (DML) – DML statements are used to manage data within tables.

DML statements are used for selecting, inserting, updating, and deleting data. These are data

plane operations in AWS.

• Built-in functions – Amazon Keyspaces supports a variety of built-in scalar functions that you

can use in CQL statements.

For more information about CQL, see CQL language reference for Amazon Keyspaces (for Apache

Cassandra). For functional differences with Apache Cassandra, see the section called “Functional

differences with Apache Cassandra”.

To run CQL queries, you can do one of the following:

• Use the CQL editor in the AWS Management Console.

What is CQL? 7

Amazon Keyspaces (for Apache Cassandra) Developer Guide

• Use AWS CloudShell and the cqlsh-expansion.

•

Use a cqlsh client.

• Use an Apache 2.0 licensed Cassandra client driver.

In addition to CQL, you can perform Data Definition Language (DDL) operations in Amazon

Keyspaces using the AWS SDKs and the AWS Command Line Interface.

For more information about using these methods to access Amazon Keyspaces, see Accessing

Amazon Keyspaces (for Apache Cassandra).

What is CQL? 8

Amazon Keyspaces (for Apache Cassandra) Developer Guide

How does Amazon Keyspaces (for Apache Cassandra)

compare to Apache Cassandra?

To establish a connection to Amazon Keyspaces, you can either use a public AWS service endpoint

or a private endpoint using Interface VPC endpoints (AWS PrivateLink) in the Amazon Virtual

Private Cloud . Depending on the endpoint used, Amazon Keyspaces can appear to the client in one

of the following ways.

AWS service endpoint connection

This is a connection established over any public endpoint. In this case, Amazon Keyspaces

appears as a nine-node Apache Cassandra 3.11.2 cluster to the client.

Interface VPC endpoint connection

This is a private connection established using an interface VPC endpoint. In this case, Amazon

Keyspaces appears as a three-node Apache Cassandra 3.11.2 cluster to the client.

Independent of the connection type and the number of nodes that are visible to the client, Amazon

Keyspaces provides virtually limitless throughput and storage. To do this, Amazon Keyspaces

maps the nodes to load balancers that route your queries to one of the many underlying storage

partitions. For more information about connections, see the section called “How they work”.

Amazon Keyspaces stores data in partitions. A partition is an allocation of storage for a table,

backed by solid state drives (SSDs). Amazon Keyspaces automatically replicates your data across

multiple Availability Zones within an AWS Region for durability and high availability. As your

throughput or storage needs grow, Amazon Keyspaces handles the partition management for you

and automatically provisions the required additional partitions.

Amazon Keyspaces supports all commonly used Cassandra data-plane operations, such as creating

keyspaces and tables, reading data, and writing data. Amazon Keyspaces is serverless, so you don’t

have to provision, patch, or manage servers. You also don’t have to install, maintain, or operate

software. As a result, in Amazon Keyspaces you don't need to use the Cassandra control plane API

operations to manage cluster and node settings.

Amazon Keyspaces automatically configures settings such as replication factor and consistency

level to provide you with high availability, durability, and single-digit-millisecond performance.

9

Amazon Keyspaces (for Apache Cassandra) Developer Guide

For even more resiliency and low-latency local reads, Amazon Keyspaces offers multi-Region

replication.

Topics

• Functional differences: Amazon Keyspaces vs. Apache Cassandra

• Supported Cassandra APIs, operations, functions, and data types

• Supported Apache Cassandra read and write consistency levels and associated costs

Functional differences: Amazon Keyspaces vs. Apache

Cassandra

The following are the functional differences between Amazon Keyspaces and Apache Cassandra.

Topics

• Apache Cassandra APIs, operations, and data types

• Asynchronous creation and deletion of keyspaces and tables

• Authentication and authorization

• Batch

• Cluster configuration

• Connections

• IN keyword

• CQL query throughput tuning

• FROZEN collections

• Lightweight transactions

• Load balancing

• Pagination

• Partitioners

• Prepared statements

• Range delete

• System tables

• Timestamps

Functional differences with Apache Cassandra 10

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Apache Cassandra APIs, operations, and data types

Amazon Keyspaces supports all commonly used Cassandra data-plane operations, such as creating

keyspaces and tables, reading data, and writing data. To see what is currently supported, see

Supported Cassandra APIs, operations, functions, and data types.

Asynchronous creation and deletion of keyspaces and tables

Amazon Keyspaces performs data definition language (DDL) operations, such as creating and

deleting keyspaces and tables, asynchronously. To learn how to monitor the creation status of

resources, see the section called “Check keyspace creation status” and the section called “Check

table creation status”. For a list of DDL statements in the CQL language reference, see the section

called “DDL statements”.

Authentication and authorization

Amazon Keyspaces (for Apache Cassandra) uses AWS Identity and Access Management (IAM)

for user authentication and authorization, and supports the equivalent authorization policies as

Apache Cassandra. As such, Amazon Keyspaces does not support Apache Cassandra's security

configuration commands.

Batch

Amazon Keyspaces supports unlogged batch commands with up to 30 commands in the batch.

Only unconditional INSERT, UPDATE, or DELETE commands are permitted in a batch. Logged

batches are not supported.

Cluster configuration

Amazon Keyspaces is serverless, so there are no clusters, hosts, or Java virtual machines (JVMs)

to configure. Cassandra’s settings for compaction, compression, caching, garbage collection, and

bloom filtering are not applicable to Amazon Keyspaces and are ignored if specified.

Connections

You can use existing Cassandra drivers to communicate with Amazon Keyspaces, but you need to

configure the drivers differently. Amazon Keyspaces supports up to 3,000 CQL queries per TCP

connection per second, but there is no limit on the number of connections a driver can establish.

Apache Cassandra APIs, operations, and data types 11

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Most open-source Cassandra drivers establish a connection pool to Cassandra and load balance

queries over that pool of connections. Amazon Keyspaces exposes 9 peer IP addresses to drivers,

and the default behavior of most drivers is to establish a single connection to each peer IP address.

Therefore, the maximum CQL query throughput of a driver using the default settings is 27,000 CQL

queries per second.

To increase this number, we recommend increasing the number of connections per IP address your

driver is maintaining in its connection pool. For example, setting the maximum connections per IP

address to 2 doubles the maximum throughput of your driver to 54,000 CQL queries per second.

As a best practice, we recommend configuring drivers to use 500 CQL queries per second per

connection to allow for overhead and to improve distribution. In this scenario, planning for 18,000

CQL queries per second requires 36 connections. Configuring the driver for 4 connections across 9

endpoints provides for 36 connections performing 500 request per second. For more information

about best practices for connections, see the section called “Connections”.

When connecting with VPC endpoints, there might be fewer endpoints available. This means that

you have to increase the number of connections in the driver configuration. For more information

about best practices for VPC connections, see the section called “VPC endpoint connections”.

IN keyword

Amazon Keyspaces supports the IN keyword in the SELECT statement. IN is not supported

with UPDATE and DELETE. When using the IN keyword in the SELECT statement, the results of

the query are returned in the order of how the keys are presented in the SELECT statement. In

Cassandra, the results are ordered lexicographically.

When using ORDER BY, full re-ordering with disabled pagination is not supported and results

are ordered within a page. Slice queries are not supported with the IN keyword. TOKENS are not

supported with the IN keyword. Amazon Keyspaces processes queries with the IN keyword by

creating subqueries. Each subquery counts as a connection towards the 3,000 CQL queries per TCP

connection per second limit. For more information, see the section called “Use IN SELECT”.

CQL query throughput tuning

Amazon Keyspaces supports up to 3,000 CQL queries per TCP connection per second, but there is

no limit on the number of connections a driver can establish.

Most open-source Cassandra drivers establish a connection pool to Cassandra and load balance

queries over that pool of connections. Amazon Keyspaces exposes 9 peer IP addresses to drivers,

IN keyword

12

Amazon Keyspaces (for Apache Cassandra) Developer Guide

and the default behavior of most drivers is to establish a single connection to each peer IP address.

Therefore, the maximum CQL query throughput of a driver using the default settings will be

27,000 CQL queries per second.

To increase this number, we recommend increasing the number of connections per IP address your

driver is maintaining in its connection pool. For example, setting the maximum connections per IP

address to 2 will double the maximum throughput of your driver to 54,000 CQL queries per second.

For more information about best practices for connections, see the section called “Connections”.

When connecting with VPC endpoints, fewer endpoints are available. This means that you have to

increase the number of connections in the driver configuration. For more information about best

practices for VPC endpoint connections, see the section called “VPC endpoint connections”.

FROZEN collections

The FROZEN keyword in Cassandra serializes multiple components of a collection data type into a

single immutable value that is treated like a BLOB. INSERT and UPDATE statements overwrite the

entire collection.

Amazon Keyspaces supports up to five levels of nesting for frozen collections by default. For more

information, see the section called “Amazon Keyspaces service quotas”.

Amazon Keyspaces doesn't support inequality comparisons that use the entire frozen collection in a

conditional UPDATE or SELECT statement. The behavior for collections and frozen collections is the

same in Amazon Keyspaces.

When you're using frozen collections with client-side timestamps, in the case where the timestamp

of a write operation is the same as the timestamp of an existing column that isn't expired or

tombstoned, Amazon Keyspaces doesn't perform comparisons. Instead, it lets the server determine

the latest writer, and the latest writer wins.

For more information about frozen collections, see the section called “Collection types”.

Lightweight transactions

Amazon Keyspaces (for Apache Cassandra) fully supports compare and set functionality on INSERT,

UPDATE, and DELETE commands, which are known as lightweight transactions (LWTs) in Apache

Cassandra. As a serverless offering, Amazon Keyspaces (for Apache Cassandra) provides consistent

performance at any scale, including for lightweight transactions. With Amazon Keyspaces, there is

no performance penalty for using lightweight transactions.

FROZEN collections

13

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Load balancing

The system.peers table entries correspond to Amazon Keyspaces load balancers. For best results,

we recommend using a round robin load-balancing policy and tuning the number of connections

per IP to suit your application's needs.

Pagination

Amazon Keyspaces paginates results based on the number of rows that it reads to process a

request, not the number of rows returned in the result set. As a result, some pages might contain

fewer rows than you specify in PAGE SIZE for filtered queries. In addition, Amazon Keyspaces

paginates results automatically after reading 1 MB of data to provide customers with consistent,

single-digit millisecond read performance. For more information, see the section called “Paginate

results”.

In tables with static columns, both Apache Cassandra and Amazon Keyspaces establish the

partition's static column value at the start of each page in a multi-page query. When a table has

large data rows, as a result of the Amazon Keyspaces pagination behavior, the likelihood is higher

that a range read operation result could return more pages for Amazon Keyspaces than for Apache

Cassandra. Consequently, there is a higher likelihood in Amazon Keyspaces that concurrent updates

to the static column could result in the static column value being different in different pages of the

range read result set.

Partitioners

The default partitioner in Amazon Keyspaces is the Cassandra-compatible Murmur3Partitioner.

In addition, you have the choice of using either the Amazon Keyspaces DefaultPartitioner or

the Cassandra-compatible RandomPartitioner.

With Amazon Keyspaces, you can safely change the partitioner for your account without having to

reload your Amazon Keyspaces data. After the configuration change has completed, which takes

approximately 10 minutes, clients will see the new partitioner setting automatically the next time

they connect. For more information, see the section called “Working with partitioners”.

Prepared statements

Amazon Keyspaces supports the use of prepared statements for data manipulation language (DML)

operations, such as reading and writing data. Amazon Keyspaces does not currently support the

Load balancing 14

Amazon Keyspaces (for Apache Cassandra) Developer Guide

use of prepared statements for data definition language (DDL) operations, such as creating tables

and keyspaces. DDL operations must be run outside of prepared statements.

Range delete

Amazon Keyspaces supports deleting rows in range. A range is a contiguous set of rows within a

partition. You specify a range in a DELETE operation by using a WHERE clause. You can specify the

range to be an entire partition.

Furthermore, you can specify a range to be a subset of contiguous rows within a partition by using

relational operators (for example, '>', '<'), or by including the partition key and omitting one or

more clustering columns. With Amazon Keyspaces, you can delete up to 1,000 rows within a range

in a single operation.

Range deletes are not isolated. Individual row deletions are visible to other operations while a

range delete is in process.

System tables

Amazon Keyspaces populates the system tables that are required by Apache 2.0 open-source

Cassandra drivers. The system tables that are visible to a client contain information that's unique to

the authenticated user. The system tables are fully controlled by Amazon Keyspaces and are read-

only. For more information, see the section called “System keyspaces”.

Read-only access to system tables is required, and you can control it with IAM access policies. For

more information, see the section called “Managing access using policies”. You must define tag-

based access control policies for system tables differently depending on whether you use the AWS

SDK or Cassandra Query Language (CQL) API calls through Cassandra drivers and developer tools.

To learn more about tag-based access control for system tables, see the section called “ Amazon

Keyspaces resource access based on tags”.

If you access Amazon Keyspaces using Amazon VPC endpoints, you see entries in the

system.peers table for each Amazon VPC endpoint that Amazon Keyspaces has permissions to

see. As a result, your Cassandra driver might issue a warning message about the control node itself

in the system.peers table. You can safely ignore this warning.

Timestamps

In Amazon Keyspaces, cell-level timestamps that are compatible with the default timestamps in

Apache Cassandra are an opt-in feature.

Range delete 15

Amazon Keyspaces (for Apache Cassandra) Developer Guide

The USING TIMESTAMP clause and the WRITETIME function are only available when client-side

timestamps are turned on for a table. To learn more about client-side timestamps in Amazon

Keyspaces, see the section called “Client-side timestamps”.

Supported Cassandra APIs, operations, functions, and data

types

Amazon Keyspaces (for Apache Cassandra) is compatible with Cassandra Query Language (CQL)

3.11 API (backward-compatible with version 2.x).

Amazon Keyspaces supports all commonly used Cassandra data-plane operations, such as creating

keyspaces and tables, reading data, and writing data.

The following sections list the supported functionality.

Topics

• Cassandra API support

• Cassandra control plane API support

• Cassandra data plane API support

• Cassandra function support

• Cassandra data type support

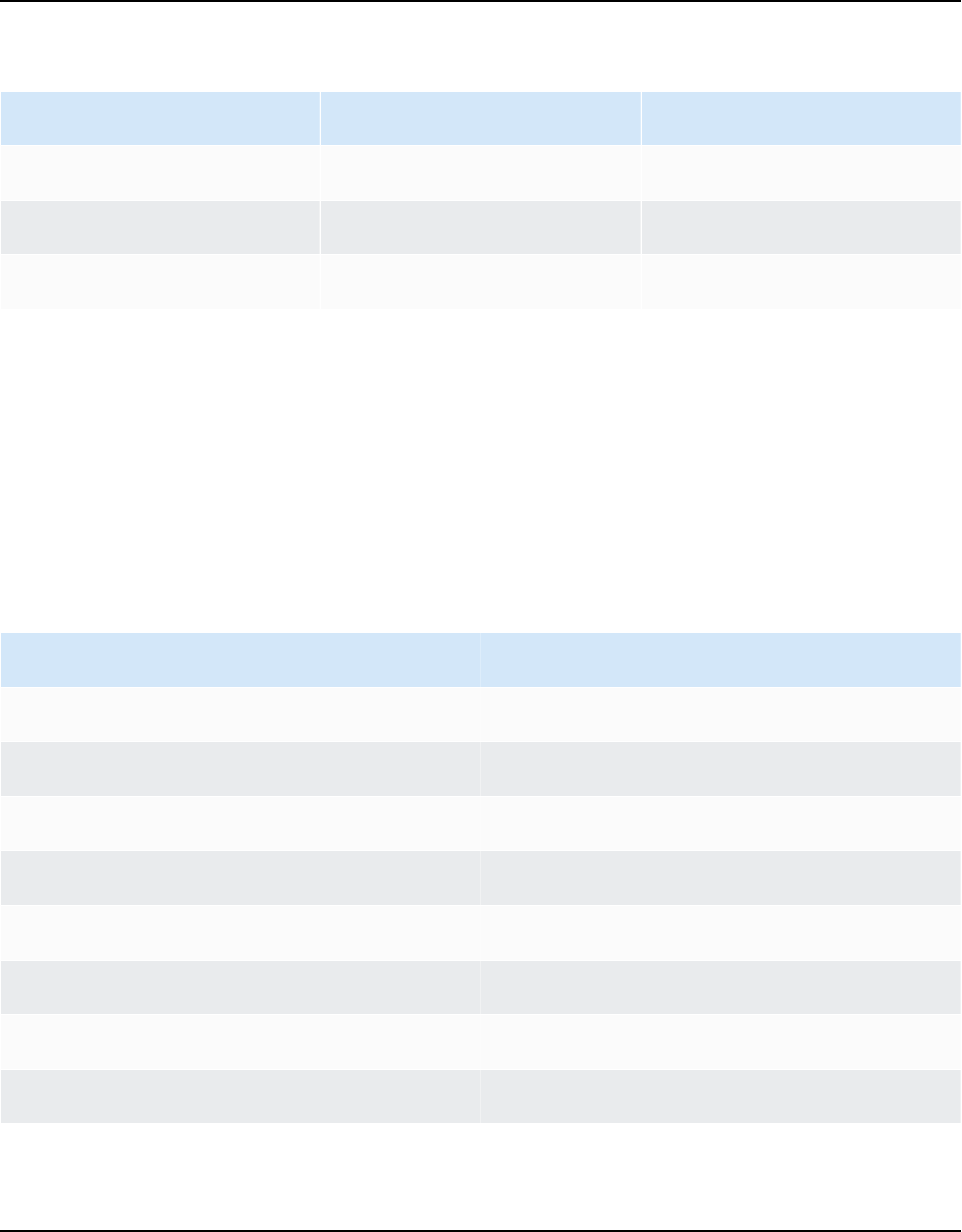

Cassandra API support

API operation Supported

CREATE KEYSPACE

Yes

ALTER KEYSPACE

Yes

DROP KEYSPACE

Yes

CREATE TABLE

Yes

ALTER TABLE

Yes

DROP TABLE

Yes

Supported Cassandra APIs, operations, functions, and data types 16

Amazon Keyspaces (for Apache Cassandra) Developer Guide

API operation Supported

CREATE INDEX

No

DROP INDEX

No

UNLOGGED BATCH

Yes

LOGGED BATCH

No

SELECT

Yes

INSERT

Yes

DELETE

Yes

UPDATE

Yes

USE

Yes

CREATE TYPE

No

ALTER TYPE

No

DROP TYPE

No

CREATE TRIGGER

No

DROP TRIGGER

No

CREATE FUNCTION

No

DROP FUNCTION

No

CREATE AGGREGATE

No

DROP AGGREGATE

No

CREATE MATERIALIZED VIEW

No

ALTER MATERIALIZED VIEW

No

Cassandra API support 17

Amazon Keyspaces (for Apache Cassandra) Developer Guide

API operation Supported

DROP MATERIALIZED VIEW

No

TRUNCATE

No

Cassandra control plane API support

Because Amazon Keyspaces is managed, the Cassandra control plane API operations to manage

cluster and node settings are not required. As a result, the following Cassandra features are not

applicable.

Feature Reason

Durable writes toggle All writes are durable

Read repair settings Not applicable

GC grace seconds Not applicable

Bloom filter settings Not applicable

Compaction settings Not applicable

Compression settings Not applicable

Caching settings Not applicable

Security settings Replaced by IAM

Cassandra data plane API support

Feature Supported

JSON support for SELECT and INSERT

statements

Yes

Static columns Yes

Cassandra control plane API support 18

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Feature Supported

Time to Live (TTL) Yes

Cassandra function support

For more information about the supported functions, see the section called “Built-in functions”.

Function Supported

Aggregate functions

No

Blob conversion

Yes

Cast

Yes

Datetime functions

Yes

Timeconversion functions Yes

TimeUuid functions

Yes

Token

Yes

User defined functions (UDF)

No

Uuid

Yes

Cassandra data type support

Data type Supported Note

ascii

Yes

bigint

Yes

blob

Yes

Cassandra function support 19

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Data type Supported Note

boolean

Yes

counter

Yes

date

Yes

decimal

Yes

double

Yes

float

Yes

frozen

Yes

inet

Yes

int

Yes

list

Yes

map

Yes

set

Yes

smallint

Yes

text

Yes

time

Yes

timestamp

Yes

timeuuid

Yes

tinyint

Yes

tuple

Yes

Cassandra data type support 20

Amazon Keyspaces (for Apache Cassandra) Developer Guide

Data type Supported Note

user-defined types

(UDT)

No To refactor UDTs with

Protocol Buffers, see Amazon

Keyspaces Protocol Buffers.

uuid

Yes

varchar

Yes

varint

Yes

Supported Apache Cassandra read and write consistency levels

and associated costs

The topics in this section describe which Apache Cassandra consistency levels are supported for

read and write operations in Amazon Keyspaces (for Apache Cassandra).

Topics

• Write consistency levels

• Read consistency levels

• Unsupported consistency levels

Write consistency levels

Amazon Keyspaces replicates all write operations three times across multiple Availability Zones

for durability and high availability. Writes are durably stored before they are acknowledged using

the LOCAL_QUORUM consistency level. For each 1 KB write, you are billed 1 write capacity unit

(WCU) for tables using provisioned capacity mode or 1 write request unit (WRU) for tables using

on-demand mode.

You can use cqlsh to set the consistency for all queries in the current session to LOCAL_QUORUM

using the following code.

CONSISTENCY LOCAL_QUORUM;

Supported Cassandra consistency levels 21

Amazon Keyspaces (for Apache Cassandra) Developer Guide

To configure the consistency level programmatically, you can set the consistency with the

appropriate Cassandra client drivers. For example, the 4.x version Java drivers allow you to set the

consistency level in the app config file as shown below.

basic.request.consistency = LOCAL_QUORUM

If you're using a 3.x version Java Cassandra driver, you can specify the

consistency level for the session by adding .withQueryOptions(new

QueryOptions().setConsistencyLevel(ConsistencyLevel.LOCAL_QUORUM) as shown in

the following code example.

Session session = Cluster.builder()

.addContactPoint(endPoint)

.withPort(portNumber)

.withAuthProvider(new SigV4AuthProvider("us-east-2"))

.withSSL()

.withQueryOptions(new

QueryOptions().setConsistencyLevel(ConsistencyLevel.LOCAL_QUORUM)

.build()

.connect();

To configure the consistency level for specific write operations, you can define the consistency

when you call QueryBuilder.insertInto with a setConsistencyLevel argument when

you're using the Java driver.

Read consistency levels

Amazon Keyspaces supports three read consistency levels: ONE, LOCAL_ONE, and LOCAL_QUORUM.

During a LOCAL_QUORUM read, Amazon Keyspaces returns a response reflecting the most recent

updates from all prior successful write operations. Using the consistency level ONE or LOCAL_ONE

can improve the performance and availability of your read requests, but the response might not

reflect the results of a recently completed write.