Phoenix WinNonlin®

User’s Guide

Applies to:

Phoenix WinNonlin 8.3

Legal Notice

Phoenix® WinNonlin®, Phoenix NLME™, IVIVC Toolkit™, CDISC® Navigator, Certara Integral™, PK

Submit™, AutoPilot Toolkit™, Job Management System™ (JMS™), Trial Simulator™, Validation

Suite™ copyright ©2005-2020, Certara USA, Inc. All rights reserved. This software and the accompa-

nying documentation are owned by Certara USA, Inc. The software and the accompanying documen-

tation may be used only as authorized in the license agreement controlling such use. No part of this

software or the accompanying documentation may be reproduced, transmitted, or translated, in any

form or by any means, electronic, mechanical, manual, optical, or otherwise, except as expressly pro-

vided by the license agreement or with the prior written permission of Certara USA, Inc.

This product may contain the following software that is provided to Certara USA, Inc. under license:

ActiveX® 2.0.0.45 Copyright © 1996-2020, GrapeCity, Inc. AngleSharp 0.9.9 Copyright © 2013-2020

AngleSharp. All rights reserved. Autofac 4.8.1 Copyright © 2014 Autofac Project. All rights reserved.

Crc32.Net 1.2.0.5 Copyright © 2016 force. All rights reserved. Formula One® Copyright © 1993-2020

Open-Text Corporation. All rights reserved. Json.Net 7.0.1.18622 Copyright © 2007 James Newton-

King. All rights reserved. LAPACK Copyright © 1992-2013 The University of Tennessee and The Uni-

versity of Tennessee Research Foundation; Copyright © 2000-2013 The University of California

Berkeley; Copyright © 2006-2013 The University of Colorado Denver. All rights reserved. Microsoft®

.NET Framework Copyright 2020 Microsoft Corporation. All rights reserved. Microsoft XML Parser

version 3.0 Copyright 1998-2020 Microsoft Corporation. All rights reserved. MPICH2 1.4.1 Copyright

© 2002 University of Chicago. All rights reserved. Minimal Gnu for Windows (MinGW, http://

mingw.org/) Copyright © 2004-2020 Free Software Foundation, Inc. NLog Copyright © 2004-2020

Jaroslaw Kowalski <jaak@jkowalski.net>. All rights reserved. Reinforced.Typings 1.0.0 Copyright ©

2020 Reinforced Opensource Products Family and Pavel B. Novikov personally. All rights reserved.

RtfToHtml.Net 3.0.2.1 Copyright © 2004-2017, SautinSoft. All rights reserved. Sentinel RMS™

8.4.0.900 Copyright © 2006-2020 Gemalto NV. All rights reserved. Syncfusion® Essential Studio for

WinForms 16.4460.0.42 Copyright © 2001-2020 Syncfusion Inc. All rights reserved. TX Text Control

.NET for Windows Forms 26.0 Copyright © 19991-2020 Text Control, LLC. All rights reserved. Web-

sites Screenshot DLL 1.6 Copyright © 2008-2020 WebsitesScreenshot.com. All rights reserved. This

product may also contain the following royalty free software: CsvHelper 2.16.3.0 Copyright © 2009-

2020 Josh Close. DotNetbar 1.0.0.19796 (with custom code changes) Copyright © 1996-2020 Dev-

Components LLC. All rights reserved. ImageMagick® 5.0.0.0 Copyright © 1999-2020 ImageMagick

Studio LLC. All rights reserved. IMSL® Copyright © 2019-2020 Rogue Wave Software, Inc. All rights

reserved. Ninject 3.2 Copyright © 2007-2012 Enkari, Ltd. Software for Locally-Weighted Regression

Authored by Cleveland, Grosse, and Shyu. Copyright © 1989, 1992 AT&T. All rights reserved. SQLite

(https://www.sqlite.org/copyright.html). Ssh.Net 2016.0.0 by Olegkap Drieseng. Xceed® Zip Library

6.4.17456.10150 Copyright © 1994-2020 Xceed Software Inc. All rights reserved.

Information in the documentation is subject to change without notice and does not represent a com-

mitment on the part of Certara USA, Inc. The documentation contains information proprietary to Cer-

tara USA, Inc. and is for use by its affiliates' and designates' customers only. Use of the information

contained in the documentation for any purpose other than that for which it is intended is not authorized.

NONE OF CERTARA USA, INC., NOR ANY OF THE CONTRIBUTORS TO THIS DOCUMENT MAKES ANY REPRESENTATION OR WARRANTY, NOR SHALL ANY WARRANTY BE IMPLIED, AS TO THE COMPLETENESS,

ACCURACY, OR USEFULNESS OF THE INFORMATION CONTAINED IN THIS DOCUMENT, NOR DO THEY ASSUME

ANY RESPONSIBILITY FOR LIABILITY OR DAMAGE OF ANY KIND WHICH MAY RESULT FROM THE USE OF SUCH

INFORMATION.

Destination Control Statement

All technical data contained in the documentation are subject to the export control laws of the United

States of America. Disclosure to nationals of other countries may violate such laws. It is the reader's

responsibility to determine the applicable regulations and to comply with them.

United States Government Rights

This software and accompanying documentation constitute “commercial computer software” and

“commercial computer software documentation” as such terms are used in 48 CFR 12.212 (Sept.

1995). United States Government end users acquire the Software under the following terms: (i) for

acquisition by or on behalf of civilian agencies, consistent with the policy set forth in 48 CFR 12.212

(Sept. 1995); or (ii) for acquisition by or on behalf of units of the Department of Defense, consistent

with the policies set forth in 48 CFR 227.7202-1 (June 1995) and 227.7202-3 (June 1995). The manu-

facturer is Certara USA, Inc., 100 Overlook Center, Suite 101, Princeton, New Jersey, 08540.

Trademarks

AutoPilot Toolkit, Integral, IVIVC Toolkit, JMS, Job Management System, NLME, Phoenix, PK Submit,

Trial Simulator, Validation Suite, WinNonlin are trademarks or registered trademarks of Certara USA,

Inc. NONMEM is a registered trademark of ICON Development Solutions. S-PLUS is a registered

trademark of Insightful Corporation. SAS and all other SAS Institute Inc. product or service names are

registered trademarks or trademarks of SAS Institute Inc. in the USA and other countries.

Sentinel RMS is a trademark of Gemalto NV. Microsoft, MS, .NET, SQL Server Compact Edition, the

Internet Explorer logo, the Office logo, Microsoft Word, Microsoft Excel, Microsoft PowerPoint®, Win-

dows, the Windows logo, the Windows Start logo, and the XL design (the Microsoft Excel logo) are

trademarks or registered trademarks of Microsoft Corporation. Pentium 4 and Core 2 are trademarks

or registered trademarks of Intel Corporation. Adobe, Acrobat, Acrobat Reader, and the Adobe PDF

logo are registered trademarks of Adobe Systems Incorporated. All other brand or product names

mentioned in this documentation are trademarks or registered trademarks of their respective compa-

nies or organizations.


Contents

Phoenix WinNonlin . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1

Bioequivalence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5

Bioequivalence user interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5

Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .13

Bioequivalence overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .17

Covariance structure types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .18

Data limits and constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .19

Average bioequivalence study designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .21

Population and individual bioequivalence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .30

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .36

Bioequivalence model examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .38

Convolution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .45

Input Mappings panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .45

UIR panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .46

Options tab . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .46

Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .48

Convolution methodology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .49

Crossover . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .51

Main Mappings panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .51

Options tab . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .51

Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .52

Crossover methodology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .52

Data and assumptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .52

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .55

Crossover design example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .55

Deconvolution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .59

User interface description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .59

Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .62

Deconvolution methodology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .62

Deconvolution example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .69

Linear Mixed Effects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .73

User interface description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .73

Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .82

General linear mixed effects model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .87

Linear mixed effects computations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .106

Linear mixed effects model examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .113

NCA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .119

NCA user interface description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .119

Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .132

NCA computation rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .134

NCA parameter formulas . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .146

NCA examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .156

Phoenix WinNonlin

User’s Guide

vi

NonParametric Superposition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

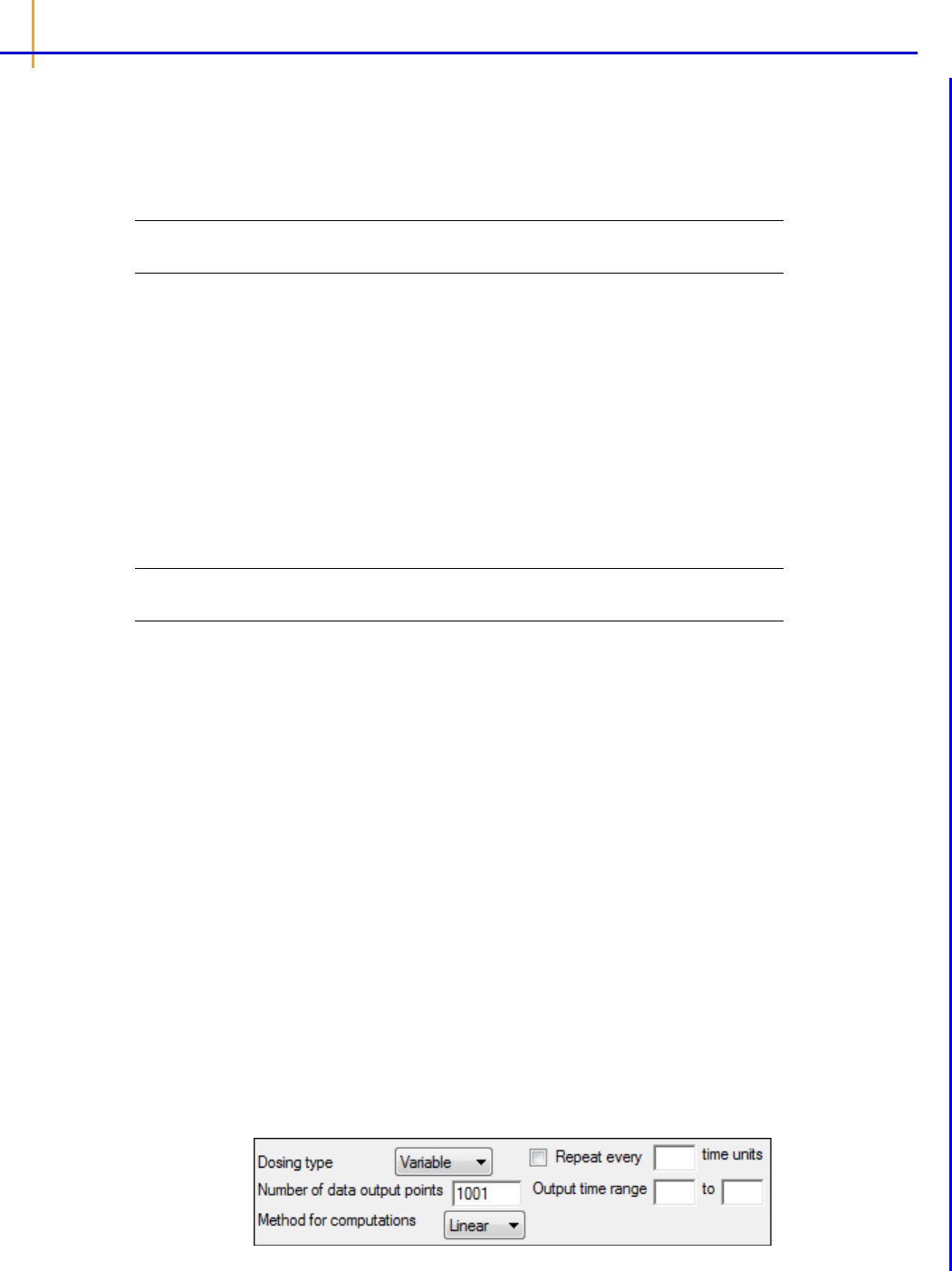

User interface description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

NonParametric Superposition methodology . . . . . . . . . . . . . . . . . . . . . . . . . . 186

NonParametric Superposition example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

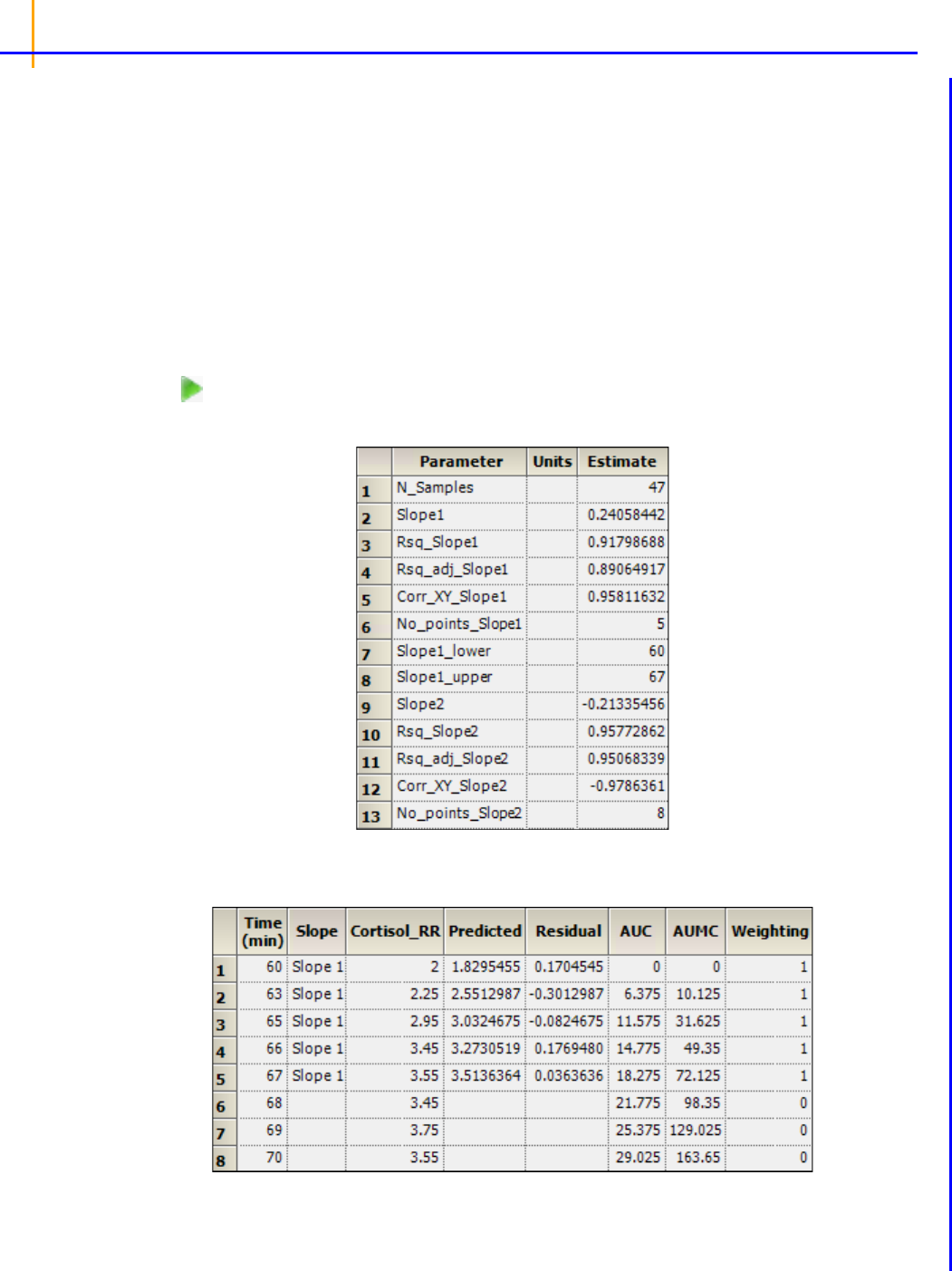

Semicompartmental Modeling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

User interface description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

Semicompartmental calculations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

Semicompartmental model example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

Modeling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .203

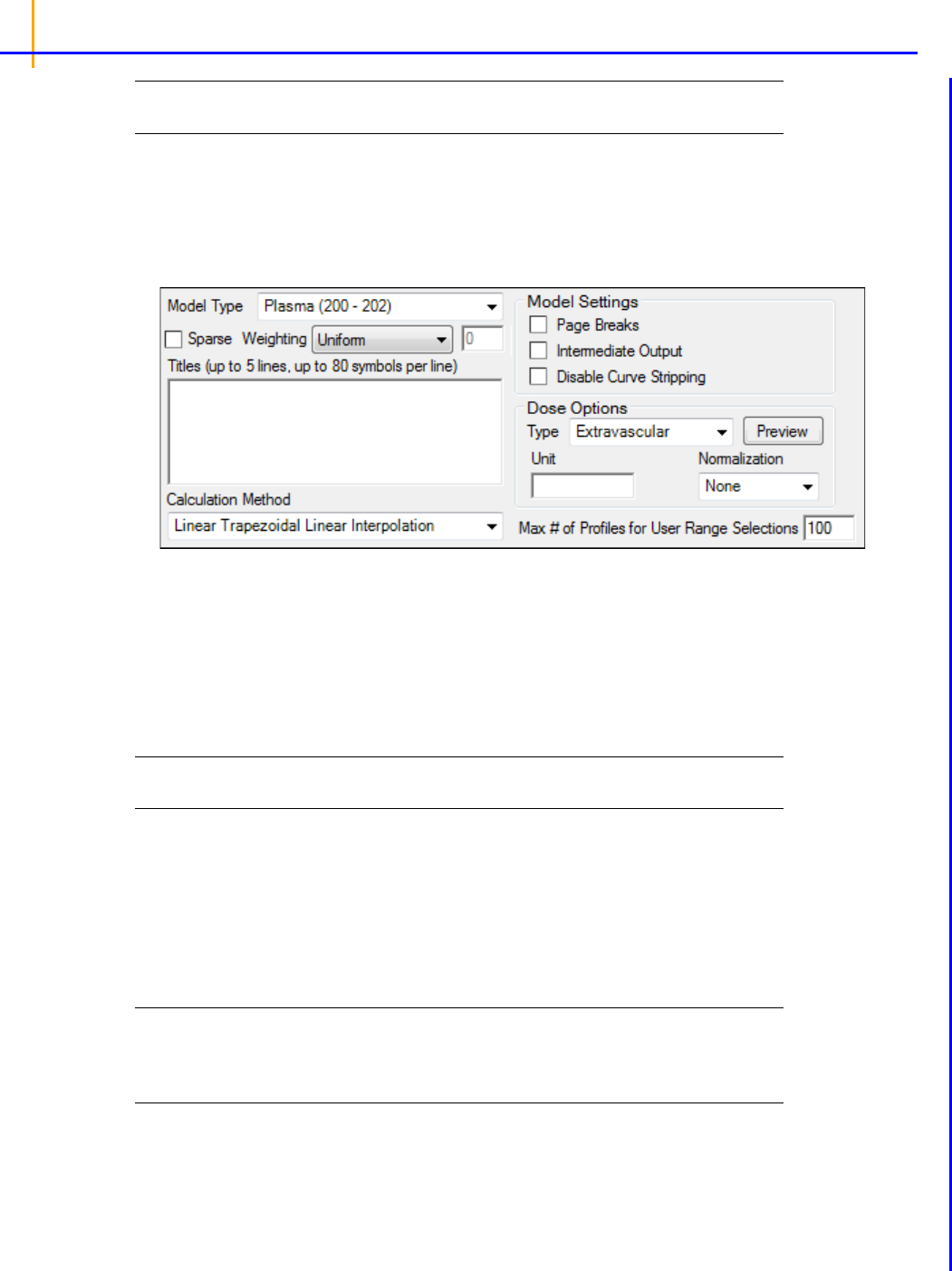

Least-Squares Regression Models interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 204

Main Mappings panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

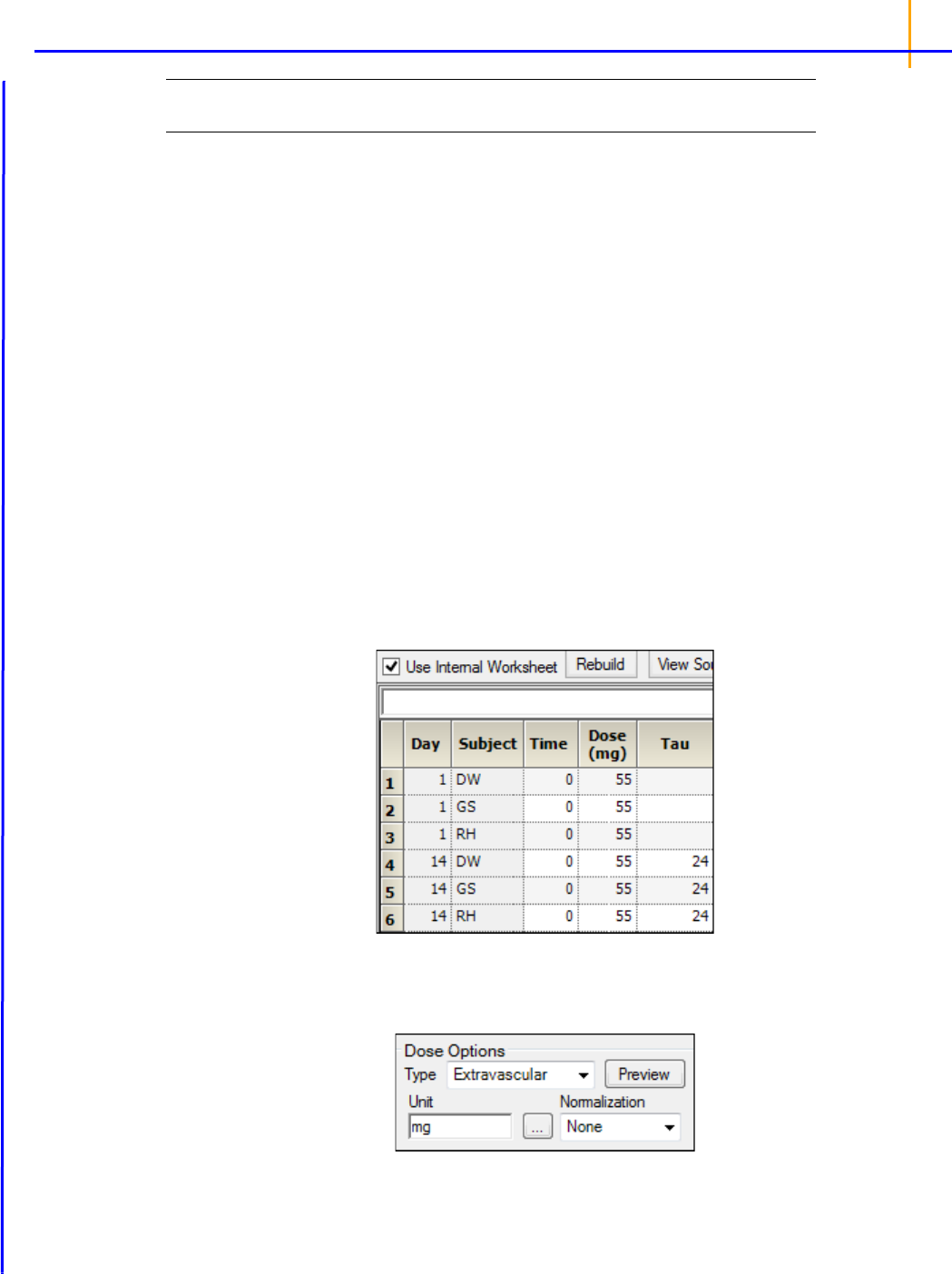

Dosing panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

Initial Estimates panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 206

PK Parameters panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

Units panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

Stripping Dose panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 208

Constants panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 208

Format panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

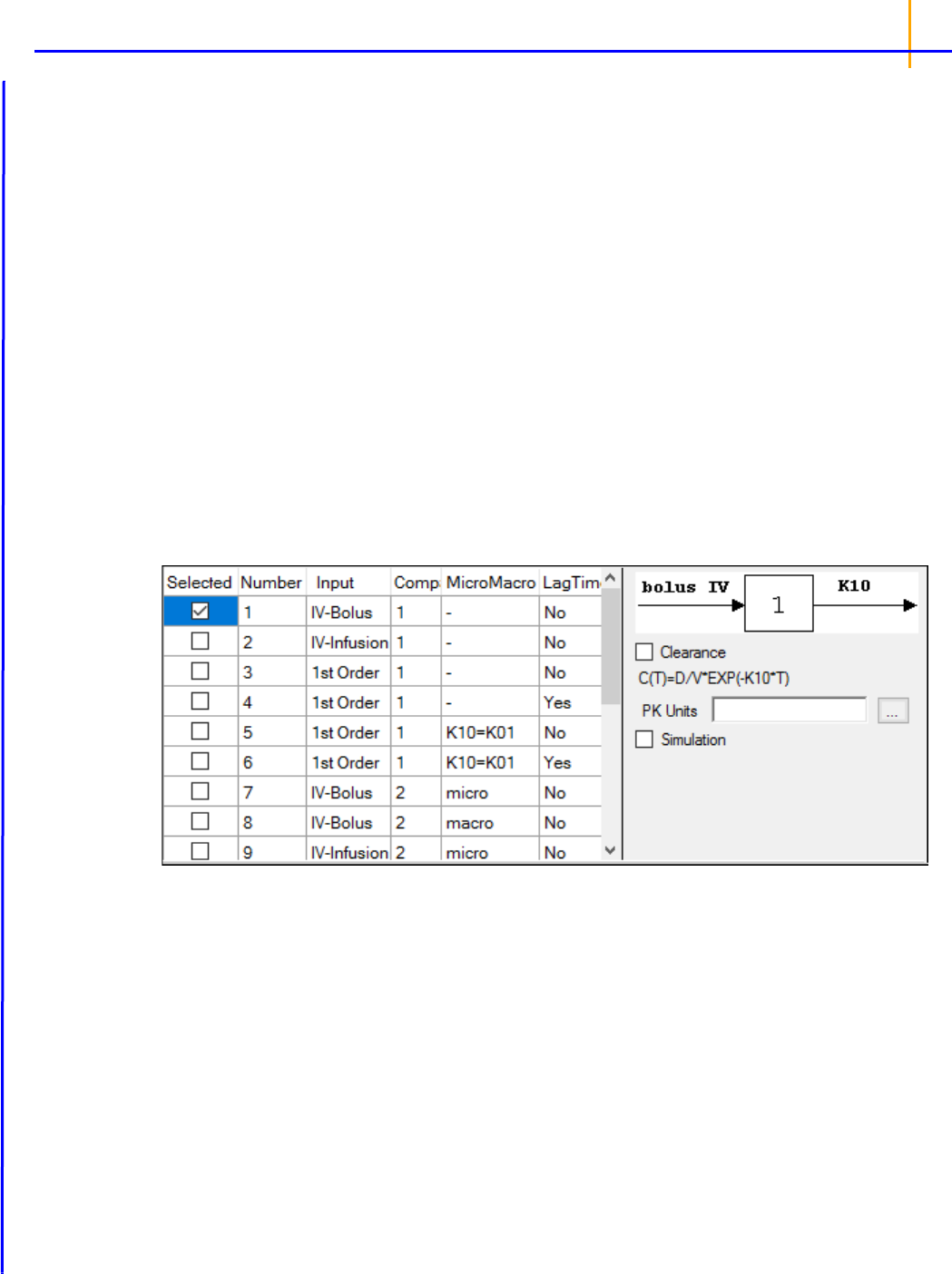

Model Selection tab . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

Linked Model tab . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 210

Weighting/Dosing Options tab . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

Parameter Options tab . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 213

Engine Settings tab . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

Least-Squares Regression Models Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

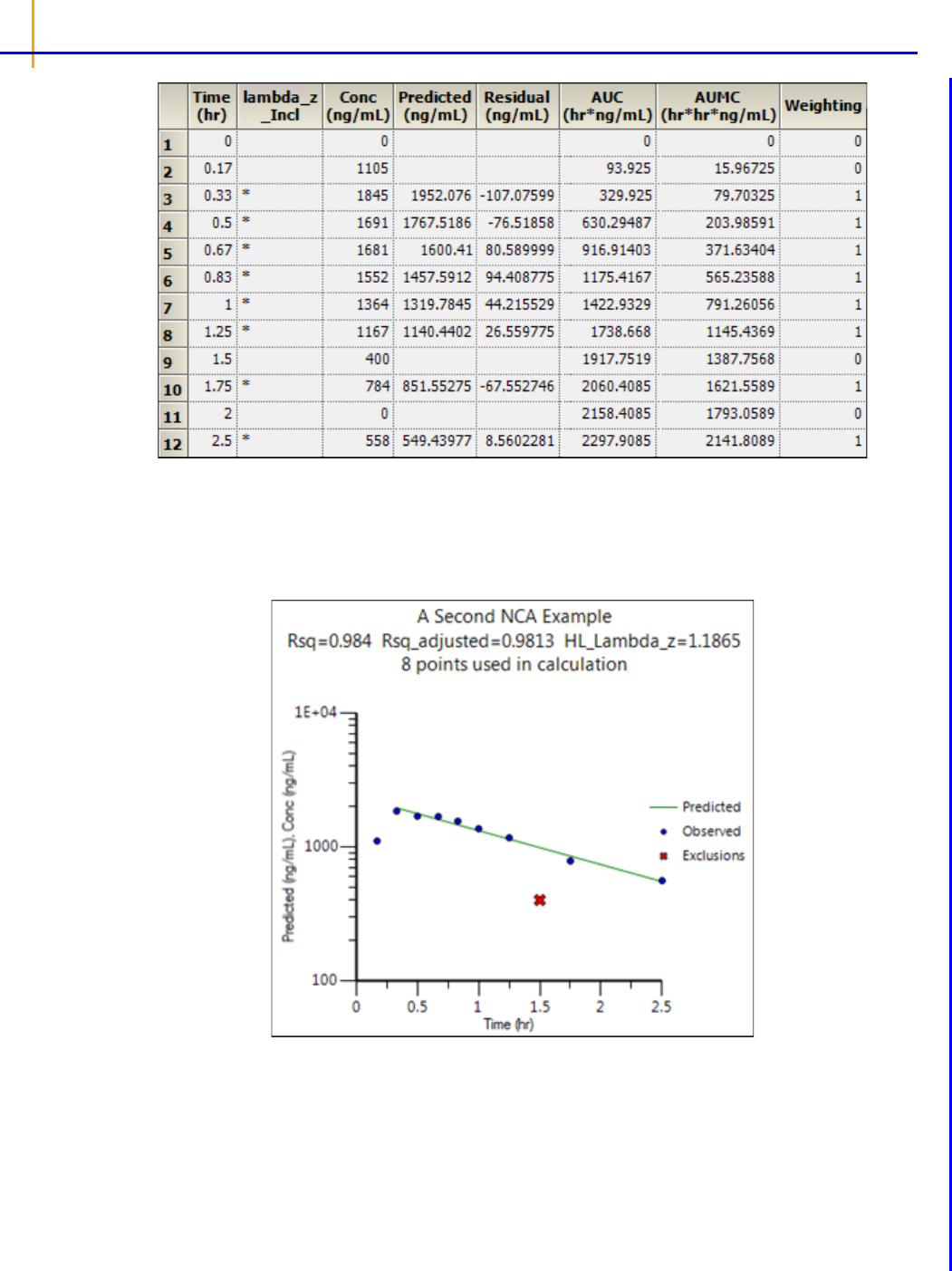

Worksheet output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 216

Plot output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

Nonlinear Regression Overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

Modeling and nonlinear regression . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

Model fitting algorithms and features of Phoenix WinNonlin . . . . . . . . . . . . . . 220

Least-Squares Regression Model calculations . . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

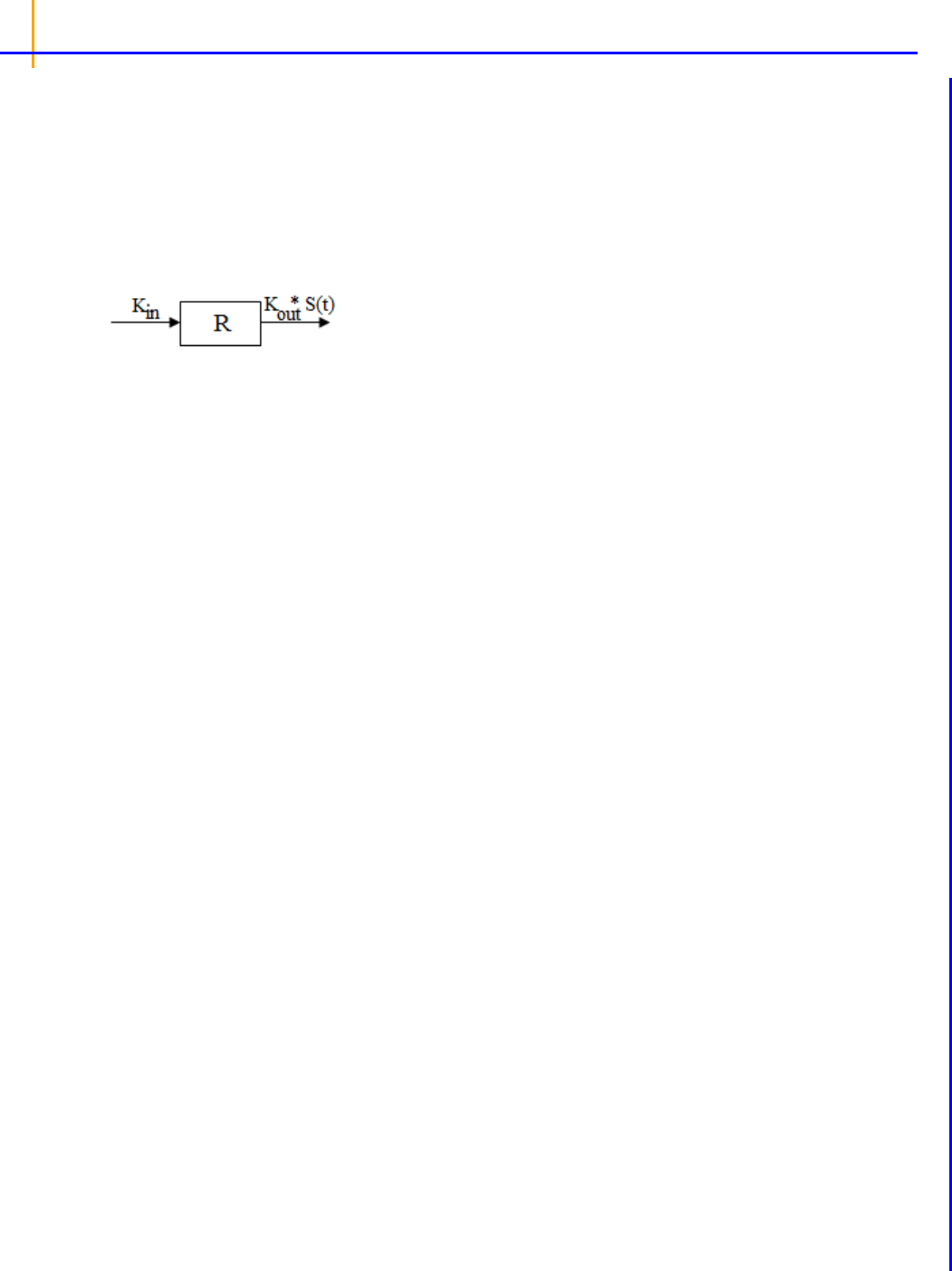

Indirect Response models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

Linear models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

Michaelis-Menten models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

Pharmacodynamic models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

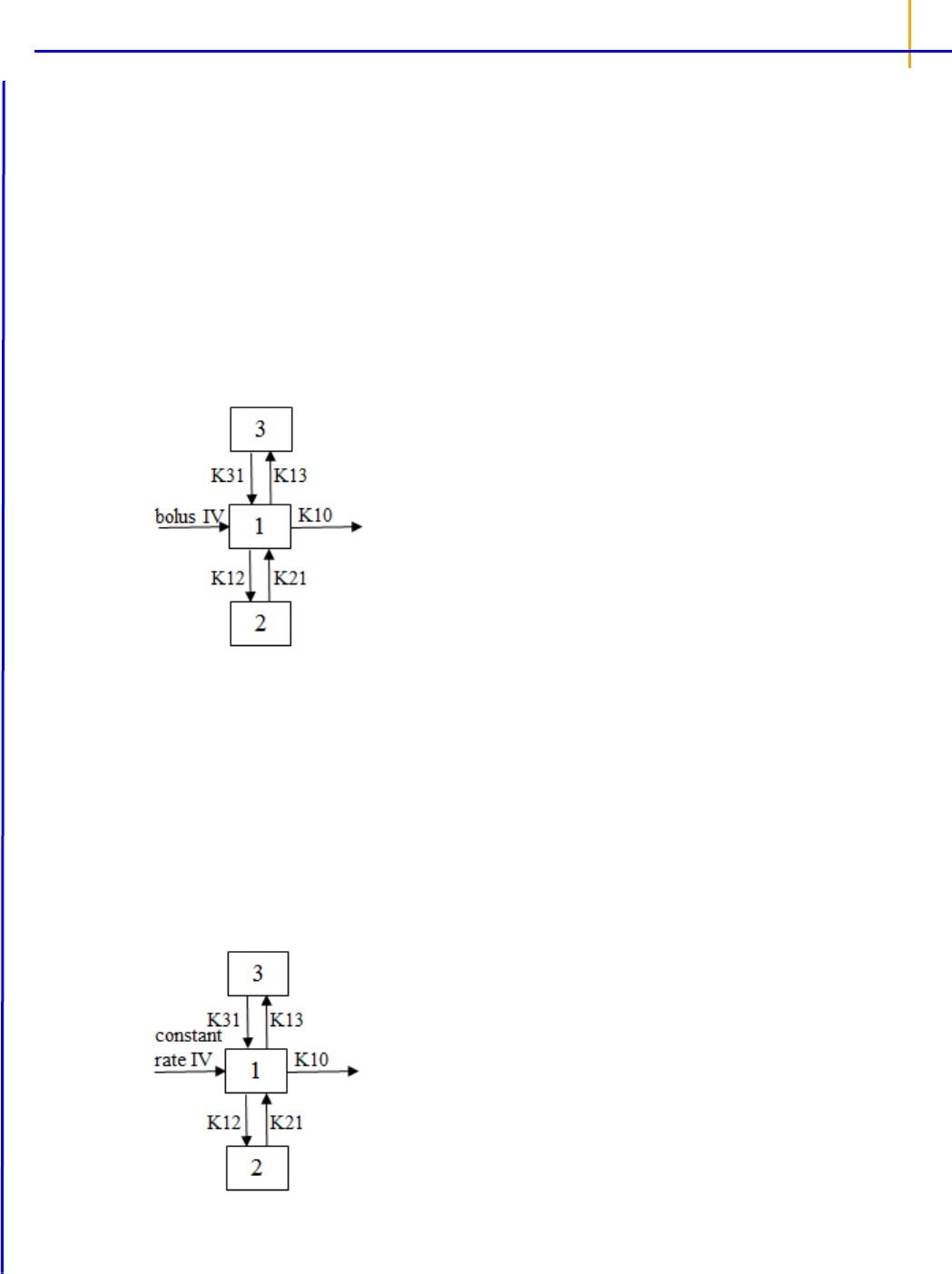

Pharmacokinetic models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

PD output parameters in a PK/PD model . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

ASCII Model dosing constants . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

Parameter Estimates and Boundaries Rules . . . . . . . . . . . . . . . . . . . . . . . . . . 243

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

PK model examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

Fit a PK model to data example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

Simulation and study design of PK models example . . . . . . . . . . . . . . . . . . . . 252

More nonlinear model examples . . . . . . . . . . . . . . . .

. . . . . . . . . . . . . . . . . . . 256

Core Output File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .261

Commands, Arrays, and Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 264


Phoenix WinNonlin

Phoenix WinNonlin is a noncompartmental analysis (NCA), pharmacokinetic/pharmacodynamic (PK/PD), and toxicokinetic (TK) modeling tool with integrated tools for data processing, post-analysis processing (including statistical tests and bioequivalence analysis), table creation, and graphics. It is well suited for the PK/PD modeling and noncompartmental analysis used to evaluate data from bioavailability and clinical pharmacology studies.

Phoenix WinNonlin includes a large library of pharmacokinetic, pharmacodynamic, noncompartmental, PK/PD linked, and indirect response models. It also supports the creation of custom models for fitting and analyzing clinical data, and it generates the graphs, tables, and output worksheets required for regulatory submission.

Operational objects that are part of WinNonlin include:

NCA

Bioequivalence

Linear Mixed Effects

Crossover

Convolution

Deconvolution

NonParametric Superposition

Semicompartmental Modeling

Modeling (Dissolution, Indirect Response, Linear, Michaelis-Menten, Pharmacodynamic, Pharmacokinetic, PK/PD Linked, User-defined ASCII)

References for the WinNonlin operational objects are provided in their descriptions. Additional references are provided below.

Pharmacokinetics References

Hooker, Staatz, and Karlsson (2007). Conditional Weighted Residuals (CWRES): A Model Diagnostic

for the FOCE Method, Pharmaceutical Research, DOI:10.1007/s11095-007-9361-x.

Brown and Manno (1978). ESTRIP, A BASIC computer program for obtaining initial polyexponential

parameter estimates. J Pharm Sci 67:1687–91.

Chan and Gibaldi (1982). Estimation of statistical moments and steady-state volume of distribution

for a drug given by intravenous infusion. J Pharm Bioph 10(5):551–8.

Cheng and Jusko (1991). Noncompartmental determination of mean residence time and steady-state

volume of distribution during multiple dosing, J Pharm Sci 80:202.

Dayneka, Garg and Jusko (1993). Comparison of four basic models of indirect pharmacodynamic

responses. J Pharmacokin Biopharm 21:457.

Endrenyi, ed. (1981). Kinetic Data Analysis: Design and Analysis of Enzyme and Pharmacokinetic

Experiments. Plenum Press, New York.

Gabrielsson and Weiner (2016). Pharmacokinetic and Pharmacodynamic Data Analysis: Concepts and Applications, 5th ed. Apotekarsocieteten, Stockholm.

Gibaldi and Perrier (1982). Pharmacokinetics, 2nd ed. Marcel Dekker, New York.

Gouyette (1983). Pharmacokinetics: Statistical moment calculations. Arzneim-Forsch/Drug Res 33

(1):173–6.

Holford and Sheiner (1982). Kinetics of pharmacological response. Pharmacol Ther 16:143.


Jusko (1990). Corticosteroid pharmacodynamics: models for a broad array of receptor-mediated

pharmacologic effects. J Clin Pharmacol 30:303.

Koup (1981). Direct linear plotting method for estimation of pharmacokinetic parameters. J Pharm Sci

70:1093–4.

Kowalski and Karim (1995). A semicompartmental modeling approach for pharmacodynamic data

assessment. J Pharmacokinet Biopharm 23(3):307–22.

Metzler and Tong (1981). Computational problems of compartmental models with Michaelis-Menten-

type elimination. J Pharmaceutical Sciences 70:733–7.

Nagashima, O’Reilly and Levy (1969). Kinetics of pharmacologic effects in man: The anticoagulant

action of warfarin. Clin Pharmacol Ther 10:22.

Wagner (1975). Fundamentals of Clinical Pharmacokinetics. Drug Intelligence, Illinois.

Regression and modeling References

Akaike (1978). Posterior probabilities for choosing a regression model. Annals of the Institute of Math-

ematical Statistics 30:A9–14.

Allen and Cady (1982). Analyzing Experimental Data By Regression. Lifetime Learning Publications,

Belmont, CA.

Bard (1974). Nonlinear Parameter Estimation. Academic Press, New York.

Bates and Watts (1988). Nonlinear Regression Analysis and Its Applications. John Wiley & Sons,

New York.

Beck and Arnold (1977). Parameter Estimation in Engineering and Science. John Wiley & Sons, New

York.

Belsley, Kuh and Welsch (1980). Regression Diagnostics. John Wiley & Sons, New York.

Corbeil and Searle (1976). Restricted maximum likelihood (REML) estimation of variance compo-

nents in the mixed models, Technometrics, 18:31–8.

Cornell (1962). A method for fitting linear combinations of exponentials. Biometrics 18:104–13.

Davies and Whitting (1972). A modified form of Levenberg's correction. Chapter 12 in Numerical

Methods for Non-linear Optimization. Academic Press, New York.

DeLean, Munson and Rodbard (1978). Simultaneous analysis of families of sigmoidal curves: Appli-

cation to bioassay, radioligand assay and physiological dose-response curves. Am J Physiol

235(2):E97–E102.

Draper and Smith (1981). Applied Regression Analysis, 2nd ed. John Wiley & Sons, NY.

Fai and Cornelius (1996). Approximate F-tests of multiple degree of freedom hypotheses in generalized least squares analysis of unbalanced split-plot experiments. J Stat Comp Sim 54:363–78.

Fletcher (1980). Practical methods of optimization, Vol. 1: Unconstrained Optimization. John Wiley &

Sons, New York.

Foss (1970). A method of exponential curve fitting by numerical integration. Biometrics 26:815–21.

Giesbrecht and Burns (1985). Two-stage analysis based on a mixed model: Large sample asymptotic

theory and small-sample simulation results. Biometrics 41:477–86.

Gill, Murray and Wright (1981). Practical Optimization. Academic Press.

Gomeni and Gomeni (1979). AUTOMOD: A Polyalgorithm for an integrated analysis of linear pharma-

cokinetic models. Comput Biol Med 9:39–48.


Hartley (1961). The modified Gauss-Newton method for the fitting of nonlinear regression functions by

least squares. Technometrics 3:269–80.

Jennrich and Moore (1975). Maximum likelihood estimation by means of nonlinear least squares.

Amer Stat Assoc Proceedings Statistical Computing Section 57–65.

Kennedy and Gentle (1980). Statistical Computing. Marcel Dekker, New York.

Koch (1972). The use of nonparametric methods in the statistical analysis of the two-period change-

over design. Biometrics 577–84.

Kowalski and Karim (1995). A semicompartmental modeling approach for pharmacodynamic data

assessment, J Pharmacokinet Biopharm 23:307–22.

Leferink and Maes (1978). STRIPACT, An interactive curve fit programme for pharmacokinetic analy-

ses. Arzneim Forsch 29:1894–8.

Longley (1967). Journal of the American Statistical Association, 69:819–41.

Nelder and Mead (1965). A simplex method for function minimization. Computer Journal 7:308–13.

Parsons (1968). Biological problems involving sums of exponential functions of time: A mathematical

analysis that reduces experimental time. Math Biosci 2:123–8.

PCNonlin. Scientific Consulting Inc., North Carolina, USA.

Peck and Barrett (1979). Nonlinear least-squares regression programs for microcomputers. J Phar-

macokinet Biopharm 7:537–41.

Ratkowsky (1983). Nonlinear Regression Modeling. Marcel Dekker, New York.

Satterthwaite (1946). An approximate distribution of estimates of variance components. Biometrics

Bulletin 2:110–4.

Schwarz (1978). Estimating the dimension of a model. Annals of Statistics 6:461–4.

Sedman and Wagner (1976). CSTRIP, A FORTRAN IV Computer Program for Obtaining Polyexpo-

nential Parameter Estimates. J Pharm Sci 65:1001–10.

Shampine, Watts and Davenport (1976). Solving nonstiff ordinary differential equations - the state of

the art. SIAM Review 18:376–411.

Sheiner, Stanski, Vozeh, Miller and Ham (1979). Simultaneous modeling of pharmacokinetics and

pharmacodynamics: application to d-tubocurarine. Clin Pharm Ther 25:358–71.

Smith and Nichols (1983). Improved resolution in the analysis of the multicomponent exponential sig-

nals. Nuclear Instrum Meth 205:479–83.

Tong and Metzler (1980). Mathematical properties of compartment models with Michaelis-Menten-

type elimination. Mathematical Biosciences 48:293–306.

Wald (1943). Tests of statistical hypotheses concerning several parameters when the number of

observations is large. Transactions of the American Mathematical Society 54.


Bioequivalence

Defined as relative bioavailability, bioequivalence involves a comparison between test and reference drug products, where the test and reference products can vary depending on the comparison to be performed. Although bioavailability and bioequivalence are closely related, bioequivalence comparisons rely on a criterion, a predetermined bioequivalence limit, and the calculation of an interval for that criterion.
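To make the criterion, limit, and interval concrete, the following minimal Python sketch back-transforms a treatment difference estimated on the natural-log scale into a ratio, builds an interval around it, and checks that interval against a pair of limits. The function name, the 0.80 and 1.25 default limits, and the example inputs are illustrative assumptions, not values taken from Phoenix; the t critical value is supplied by the caller.

```python
import math

def ratio_interval(diff_ln, se_ln, t_crit, lower_limit=0.80, upper_limit=1.25):
    """Return (ratio, lower, upper, within_limits) for a test/reference comparison.

    diff_ln : estimated test-minus-reference difference on the ln scale
    se_ln   : standard error of that difference
    t_crit  : t critical value chosen by the caller for the desired interval
    The default limits are assumed for illustration only.
    """
    ratio = math.exp(diff_ln)
    lower = math.exp(diff_ln - t_crit * se_ln)
    upper = math.exp(diff_ln + t_crit * se_ln)
    # The comparison passes only if the whole interval lies inside the limits.
    within = lower_limit <= lower and upper <= upper_limit
    return ratio, lower, upper, within

# Hypothetical inputs: no difference on the ln scale, SE 0.05, t critical value 1.734
ratio, lower, upper, within = ratio_interval(0.0, 0.05, 1.734)
print(f"ratio={ratio:.4f}, interval=({lower:.4f}, {upper:.4f}), within limits: {within}")
```

The sketch only illustrates how the three ingredients fit together; the models Phoenix uses to estimate the difference and its standard error are described in the sections that follow.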

Use one of the following to add the object to a Workflow:

Right-click menu for a Workflow object: New > Computation Tools > Bioequivalence.

Main menu: Insert > Computation Tools > Bioequivalence.

Right-click menu for a worksheet: Send To > Computation Tools > Bioequivalence.

Note: To view the object in its own window, select it in the Object Browser and double-click it or press

ENTER. All instructions for setting up and execution are the same whether the object is viewed in

its own window or in Phoenix view.

This section contains information on the following topics:

Bioequivalence user interface

Results

Bioequivalence overview

Covariance structure types

Data limits and constraints

Average bioequivalence study designs

Population and individual bioequivalence

References

Bioequivalence model examples

Bioequivalence user interface

Main Mappings panel

Model tab

Fixed Effects tab

Variance Structure tab

Options tab

General Options tab

The Bioequivalence model object is based on a mixed effects model. For more information, see “General linear mixed effects model”.

Main Mappings panel

Use the Main Mappings panel to identify how input variables are used in a bioequivalence model. A separate analysis is performed for each profile, or unique level of sort key(s). Required input is highlighted orange in the interface.

None: Data types mapped to this context are not included in any analysis or output.

Sort: Categorical variable(s) identifying individual data profiles, such as subject ID and treatment in a crossover study. A separate analysis is done for each unique combination of sort variable values.

Subject: The subjects in a dataset.

Sequence: The order of drug administration.


Period: The washout period, or the time period between two treatments needed for drug elimination. Only applicable in a crossover study.

Formulation: The treatment and reference drug formulations used in a study.

Dependent: The dependent variables, such as drug concentration, that provide the values used to fit the model.

Classification: Classification variables or factors that are categorical independent variables,

such as formulation, treatment, and gender.

Regressors: Regressor variables or covariates that are continuous independent variables, such as body weight. A regressor variable can also be used to weight the dataset.
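The effect of the Sort context, one analysis per unique combination of sort variable values, can be pictured with a short Python sketch. The column names and values below are hypothetical, and the grouping function is an illustration of the idea rather than the way Phoenix processes worksheets.

```python
from collections import defaultdict

def split_profiles(rows, sort_keys):
    """Group rows (dicts) by the values of the sort-key columns."""
    profiles = defaultdict(list)
    for row in rows:
        # One profile per unique combination of sort variable values.
        key = tuple(row[k] for k in sort_keys)
        profiles[key].append(row)
    return dict(profiles)

# Hypothetical worksheet rows
rows = [
    {"Study": "A", "Subject": 1, "Formulation": "Test", "AUC": 102.0},
    {"Study": "A", "Subject": 1, "Formulation": "Reference", "AUC": 98.5},
    {"Study": "B", "Subject": 7, "Formulation": "Test", "AUC": 110.2},
    {"Study": "B", "Subject": 7, "Formulation": "Reference", "AUC": 105.9},
]

for key, profile in split_profiles(rows, ["Study"]).items():
    print(key, len(profile), "rows would be analyzed together")
```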

Input data considerations

Missing data: For population and individual bioequivalence, the application assumes complete data for each subject. If a subject has a missing observation, that subject is not included in the analysis. If the data have many missing observations, consider imputing estimated values to produce complete records per subject. Phoenix does not impute missing values.

Variable name and data limits: See “Data limits and constraints”.
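The missing-data rule for population and individual bioequivalence amounts to dropping every record of a subject that has at least one missing observation. A minimal Python sketch of that rule follows, assuming missing values are represented as None and using hypothetical column names; it can help preview which subjects would be excluded before the data are sent to the object.

```python
def complete_subjects(rows, subject_key, value_key):
    """Keep only rows from subjects that have no missing (None) observations."""
    incomplete = {r[subject_key] for r in rows if r[value_key] is None}
    return [r for r in rows if r[subject_key] not in incomplete]

# Hypothetical data: subject 2 is missing one observation
rows = [
    {"Subject": 1, "Period": 1, "Cmax": 12.1},
    {"Subject": 1, "Period": 2, "Cmax": 11.4},
    {"Subject": 2, "Period": 1, "Cmax": 13.0},
    {"Subject": 2, "Period": 2, "Cmax": None},
]

kept = complete_subjects(rows, "Subject", "Cmax")
print(sorted({r["Subject"] for r in kept}))
```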

Note: Be sure to finalize column names in your input data before sending the data to the Bioequivalence

object. Changing names after the object is set up can cause the execution to fail.

Model tab

The Model tab allows users to specify the bioequivalence model.

• Under Type of study, select the Parallel/Other or Crossover option button.

• Under Type of Bioequivalence, select the Average or Population/Individual option button. Population and individual bioequivalence can be determined only for crossover studies.

• In the Reference Formulation menu, select the reference formulation or treatment. The menu is available only after a formulation variable is mapped.

The Bioequivalence object automatically sets up default fixed effects, random effects, and repeated models for average bioequivalence studies depending on the type of study design: replicated crossover, nonreplicated crossover, or parallel. For more details on the default settings, see “Average bioequivalence study designs”.

For more information on population and individual bioequivalence, see “Population and individual bioequivalence”.


Fixed Effects tab

The Fixed Effects tab allows users to specify settings for study variables used in an average bioequivalence model. Population and individual bioequivalence models do not use fixed effects, so most options in the Fixed Effects tab are unavailable for those models.

Average bioequivalence

For average bioequivalence models the Model Specification field automatically displays an appropri-

ate fixed effects model for the study type. Edit the model as needed.

Phoenix automatically specifies average bioequivalence models based on the study type selected

and the dataset used. These default models are based on US FDA Guidance for Industry (January

2001).

See the following for details on the models used in a particular study type:

Replicated crossover designs

Nonreplicated crossover designs

Parallel designs

Study variables in the Classification box and the Regressors/Covariates box can be dragged to the

Model Specification field to create the model structure.

• Drag variables from the Classification and the Regressors/Covariates boxes to the Model Specification field and click the operator buttons to build the model, or type the names and operators directly in the field.

+ addition,

* multiplication,

() parentheses for indicating nested variables in the model

Below are some guidelines for using parentheses:

• Parentheses in the model specification represent nesting of model terms.

• Seq+Subject(Seq)+Period+Form is a valid use of parentheses and indicates that Subject is nested within Seq.

• Drug+Disease+(Drug*Disease) is not a valid use of parentheses in the model specification.
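The two examples above can be checked mechanically. The Python sketch below splits a model specification into terms at top-level plus signs and reports nesting only when a variable name directly precedes the parentheses. It is a hypothetical illustration of the guideline, not the parser Phoenix uses, and both function names are invented for this example.

```python
def split_terms(spec):
    """Split a model specification into terms at top-level '+' signs."""
    terms, depth, current = [], 0, ""
    for ch in spec.replace(" ", ""):
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "+" and depth == 0:
            terms.append(current)
            current = ""
        else:
            current += ch
    terms.append(current)
    return terms

def nesting(term):
    """Return (nested, within) for a term such as 'Subject(Seq)', else None."""
    if term.endswith(")") and "(" in term:
        nested, within = term[:-1].split("(", 1)
        if nested and within:
            return nested, within
    return None

for spec in ("Seq+Subject(Seq)+Period+Form", "Drug+Disease+(Drug*Disease)"):
    for term in split_terms(spec):
        print(spec, "|", term, "|", nesting(term))
```

In the second specification the parenthesized term has no variable name in front of it, so the sketch finds no nesting relationship, which mirrors why that form is not a valid use of parentheses.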

• Select a weight variable from the Regressors/Covariates box and drag it to the Weight Variable

field.

To remove the weight variable, drag the variable from the Weight Variable field back to the

Regressors/Covariates box.

The Regressors/Covariates box lists variables mapped to the Regressors context (in the Main

Mappings panel). If a variable is used to weight the data then the variable is displayed in the

Regressors/Covariates box. Below are some guidelines for using weight variables:


• The weights for each record must be included in a separate column in the dataset.

• Weight variables are used to compensate for observations having different variances.

• When a weight variable is specified, each row of data is multiplied by the square root of the

corresponding weight.

• Weight variable values should be proportional to the reciprocals of the variances. Typically,

the data are averages and weights are sample sizes associated with the averages.

• The Weight variable cannot be a classification variable. It must be declared as a regressor/

covariate before it can be used as a weight variable. It can also be used in the model.
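The square-root scaling described in the guidelines above can be illustrated with a minimal sketch. It assumes an intercept-only model and hypothetical data (group averages weighted by their sample sizes); the function name is illustrative and this is not Phoenix code.

```python
import math

def weighted_intercept(values, weights):
    """Fit an intercept-only model by ordinary least squares after
    multiplying each row (response and design column) by sqrt(weight)."""
    # The intercept column is 1 for every row; scale it and the response.
    x = [math.sqrt(w) for w in weights]
    y = [v * math.sqrt(w) for v, w in zip(values, weights)]
    # OLS through the origin on the scaled rows: sum(x*y) / sum(x*x).
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)

# Hypothetical data: three group averages and their sample sizes.
averages = [10.0, 12.0, 11.0]
sample_sizes = [4, 9, 2]

estimate = weighted_intercept(averages, sample_sizes)
weighted_mean = sum(a * n for a, n in zip(averages, sample_sizes)) / sum(sample_sizes)
```

In this sketch the estimate from the scaled rows agrees with the ordinary weighted mean, which is why weights proportional to the reciprocals of the variances give more influence to the more precise observations.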

• In the Dependent Variables Transformation menu, select one of the transformation options:

None

Ln(x): Natural logarithm transformation

Log10(x): Logarithmic base 10 transformation

Already Ln-transformed: Select if the dependent variable values are already transformed.

Already Log10-transformed: Select if the dependent variable values are already transformed.

• In the Fixed Effects Confidence Level box, type the level for the fixed effects model. The default value is 95%.

• By default, the intercept term is included in the model, although it is not shown in the Model Specification field. Check the No Intercept checkbox to remove the intercept term.

• Use the Test Numerator and Test Denominator fields to specify an additional test of hypothesis

in the case of a model with only fixed effects.

For this case, the default error term (denominator) is the residual error, so an alternate test can be

requested by entering the fixed effects model terms to use for the numerator and denominator of

the F-test. The terms entered must be in the fixed effects model and the random/repeated models

must be empty for the test to be performed. (See

“Tests of hypotheses” for additional information.)

• In the Dependent Variables Transformation menu, select Ln(x) or Already Ln-transformed.

Ln(x): Natural logarithm transformation

Already Ln-transformed: Select if the dependent variable values are already transformed.

Variance Structure tab

The Variance Structure tab allows users to set random effects and repeated specification for the bio-

equivalence model. Users can also set traditional variance components and random coefficients. The

Variance Structure tab is only available for average bioequivalence models.


Users can specify none, one, or multiple random effects. The random effects specify Z and the corresponding elements of $G = \mathrm{Var}(\gamma)$. Users can specify only one repeated effect. The repeated effect specifies $R = \mathrm{Var}(\varepsilon)$.

Phoenix automatically specifies random effects models and repeated specifications for average bio-

equivalence models. For more on the default models and specifications, see

“Recommended models

for average bioequivalence”

.

The random effects model can be created using the classification variables.

• Use the pointer to drag the variables from the Classification Variables box to Random 1 tab.

• Users can also type variable names in the fields in the Random 1 tab.

The random effects model can be created using the regressor or covariate variables.

• Use the pointer to drag the variables from the Regressors/Covariates box to Random 1 tab.

• Users can also type variable names in the fields in the Random 1 tab.

Random 1 and Repeated tabs

The Random 1 tab is used to add random effects to the model. The random effects are built using the

classification variables, the regressors/covariates variables, and the operator buttons.

The Repeated tab is used to specify the R matrix in the mixed model. If no repeated statement is specified, R is assumed to be equal to $\sigma^2 I$. The repeated effect must contain only classification variables.

The default repeated model depends on whether the crossover study is replicated or not.

Caution: The same variable cannot be used in both the fixed effects specification and the random effects

specification unless it is used differently, such as part of a product. The same term (single vari-

ables, products, or nested variables) must not appear in both specifications.

• Drag variables from the boxes on the left to the fields in the tab and click the operator buttons to

build the model or type the names and operators directly in the fields.

+ addition (not available in the Repeated tab or when specifying the variance blocking or group

variables),

* multiplication,

() parentheses for indicating nested variables in the model.

•The Variance Blocking Variables (Subject) field is optional and, if specified, must be a classifi-

cation model term built from the items in the Classification Variables box. This field is used to

identify the subjects in a dataset. Complete independence is assumed among subjects, so the

subject variable produces a block diagonal structure with identical blocks.

•The Group field is also optional and, if specified, must be a classification model term built from

items in the Classification Variables box. It defines an effect specifying heterogeneity in the

covariance structure. All observations having the same level of the group effect have the same

covariance parameters. Each new level of the group effect produces a new set of covariance

parameters with the same structure as the original group.

• (Random 1 tab only) Check the Random Intercept checkbox to set the intercept to random.

This setting is commonly used when a subject is specified in the Variance Blocking Variables

(Subject) field. The default setting is no random intercept.

• If the model contains random effects, the covariance structure type must be specified from the

Type menu.


If Banded Unstructured (b), Banded No-Diagonal Factor Analytic (f), or Banded Toeplitz (b)

is selected, type the number of bands in the Number of bands(b) field (default is 1).

The number of factors or bands corresponds to the dimension parameter. For some covariance

structure types this is the number of bands and for others it is the number of factors. For explana-

tions of covariance structure types, see

“Covariance structure types”.

• Click Add Random to add additional variance models.

• Click Delete Random to delete a variance model.

Options tab

Settings in the Options tab change depending on whether the model type is average or population/

individual.

Average bioequivalence options

• In the Confidence Level field, type the level for the bioequivalence model (default is 90%).

• In the Percent of Reference to Detect field, type the percentage of reference treatment to detect

(default is 20%).

• In the Anderson-Hauck Lower Limit field, type the lower limit for the Anderson-Hauck test

(default is 0.8).

• In the Anderson-Hauck Upper Limit field, type the upper limit for the Anderson-Hauck test

(default is 1.25 for log-transformed data and 1.2 for non-transformed data).

For more on the Anderson-Hauck test, see

“Anderson-Hauck test”.
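The default limits listed above are consistent with a simple relationship to the percent of reference to detect. The following sketch shows that relationship; the function name and the rule itself are assumptions inferred from the stated defaults (0.8, 1.25, and 1.2), not a documented Phoenix formula.

```python
def equivalence_limits(percent_to_detect=20.0, log_transformed=True):
    """Return (lower, upper) limits as ratios to the reference.

    Assumed rule: the lower limit is 1 - p; the upper limit is the
    reciprocal of the lower limit for log-transformed data and
    1 + p for non-transformed data.
    """
    p = percent_to_detect / 100.0
    lower = 1.0 - p
    upper = 1.0 / lower if log_transformed else 1.0 + p
    return lower, upper

log_limits = equivalence_limits(20.0, log_transformed=True)
raw_limits = equivalence_limits(20.0, log_transformed=False)
```

With the default 20%, this sketch reproduces the default limits quoted above for both the log-transformed and non-transformed cases.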

Population/Individual bioequivalence options

• In the Confidence Level field, type the level for the bioequivalence model (default is 95%).

• In the Percent of Reference to Detect field, type the percentage of reference treatment to detect

(default is 20%).

• In the Total SD Standard field in the Population Limits group, type the value of the total standard deviation standard (default is 0.2).

•In the Epsilon field in the Population Limits group, type an epsilon value for the population limits

(default is 0.02).

• In the Within Subject SD Standard field in the Individual Limits group, type the value of the within-subject standard deviation standard (default is 0.2).

• In the Epsilon field in the Individual Limits group, type an epsilon value for the individual limits

(default is 0.05).


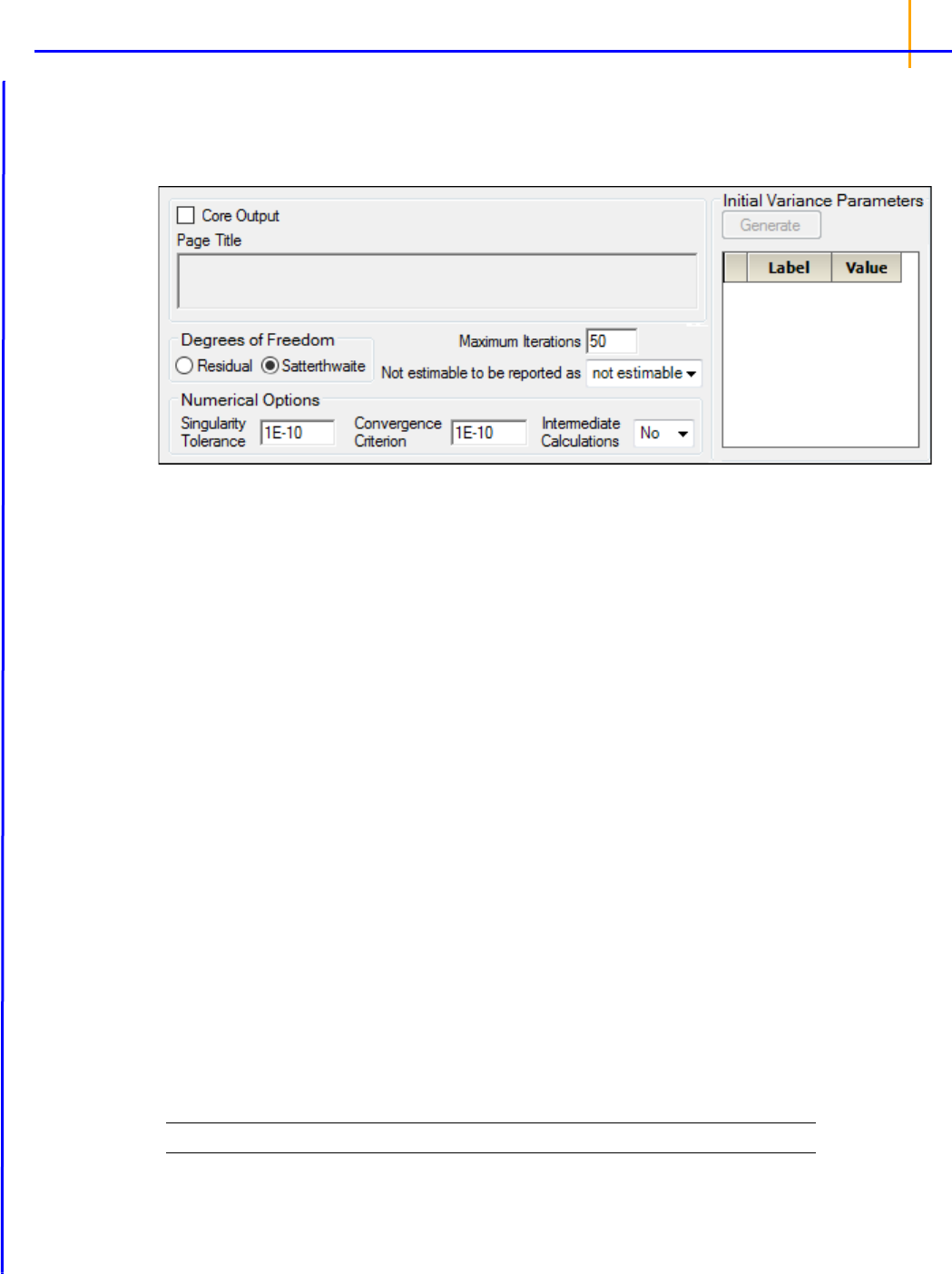

General Options tab

The General Options tab is used to set output and calculation options for a bioequivalence model.

The options change depending on whether the model is average bioequivalence or population/individ-

ual bioequivalence.

Average bioequivalence options

•Check the Core Output checkbox to include the Core Output text file in the results.

• In the Page Title field, type a title for the Core Output text file.

• Choose the degrees of freedom calculation method:

Residual: The same as the calculation method used in a purely fixed effects model.

Satterthwaite: The default setting. Computes the degrees of freedom based on a chi-square approximation to the distribution of the variance.

• In the Maximum Iterations field, type the number of maximum iterations. This is the number of

iterations in the Newton fitting algorithm. The default setting is 50.

• Use the Not estimable to be reported as menu to determine how output that is not estimable is

represented.

• not estimable

•0 (zero)

• In the Singularity Tolerance field, type the tolerance level. The columns in X and Z are elimi-

nated if their norm is less than or equal to this number. (Default tolerance value is 1E–10.)

• In the Convergence Criterion field, type the criterion used to determine if the model has con-

verged (default is 1E–10).

• In the Intermediate Calculations menu, select whether (Yes) or not (No) to include the design

matrix, reduced data matrix, asymptotic covariance matrix of variance parameters, Hessian, and

final variance matrix in the Core Output text file.

• In the Initial Variance Parameters group, click Generate to edit the initial variance parameters

values.

Note: The Generate initial variance parameters option is available only if the model uses random effects.

• Select a cell in the Value column and type a value for the parameter (default is 1).

If the values are not specified, then Phoenix uses the method of moments estimates.


To delete one or more of the parameters from the table:

• Highlight the row(s).

•Select Edit >> Delete from the menubar or press X in the main toolbar.

• Click the Selected Row(s) option button and click OK.

Population/Individual bioequivalence options

• Select the Core Output checkbox to include the Core Output text file in the results.

• In the Page Title field, type a title for the Core Output text file.


Results

The Bioequivalence object creates several output worksheets. Each type of bioequivalence model

also creates a text file with model settings. If the Core Output checkbox is selected, then a Core Out-

put text file that contains model output is added to the results.

Average bioequivalence output

Population/Individual bioequivalence output

Text output

Ratios test

Average bioequivalence output

• Average Bioequivalence: Output from the bioequivalence analysis. Columns include:

Dependent: Input data column mapped to the Dependent context.

Units: Units, if specified in the input dataset.

FormVar: Input data column mapped to the Formulation context.

FormRef: Reference formulation.

RefLSM: Reference least squares mean.

RefLSM_SE: Standard error computed for reference least squares mean.

RefGeoLSM: Geometric reference least squares mean.

Test: Test formulation.

TestLSM: Test least squares mean.

TestLSM_SE: Standard error computed for test least squares mean.

TestGeoLSM: Geometric test least squares mean.

Difference: Difference between test and reference LSM values.

Diff_SE: Standard error of the difference in LSM values.

Diff_DF: Degrees of freedom for the difference in LSM values.

Ratio_%REF_: Percent ratio of test LSM to reference LSM values.

(See

“Least squares means” for more details.)

CI_xx_Lower(Upper): Confidence interval computations. (See

“Classical intervals” for more

details.)

t1_TOST: Left-tail test result.

t2_TOST: Right-tail test result.

Prob_80(125)_00: Probability results for the two one-sided t-tests.

MaxProb: Largest value of the probability results.

TotalProb: Sum of the probability results.

(See

“Two one-sided t-tests” for more details.)

Power_TOST: Power of the two one-sided t-tests. (See

“Power of the two one-sided t-tests proce-

dure”

for more details.)

AHpval: Anderson-Hauck test statistic. (See

“Anderson-Hauck test” for more details.)

Power_80_20: Power to detect a difference in least square means equal to 20% of the reference

LSM. (See

“Power for 80/20 Rule” for more details.)

Prob_Eq_Var: Probability of equal variance. (See

“Tests for equal variances” for more details.)

• Ratios Test: Ratios of reference to test mean computed. One worksheet for each test formula-

tion.

• User Settings: User-specified settings

For convenience, the Linear Mixed Effects Modeling output is also included with Average Bioequiva-

lence, but note that, since there is no specification of test or reference in Linear Mixed Effects Model-

ing, the LSM differences are computed alphabetically, which could correspond to reference minus test

formulation rather than test minus reference.


• Diagnostics: Number of observations, number of observations used, residual sums of squares,

residual degrees of freedom, residual variance, restricted log likelihood, AIC, SBC, and Hessian

eigenvalues

• Final Fixed Parameters: Sort variables, dependent variable(s), units, effect level, parameter esti-

mate, t-statistic, p-value, intervals, etc.

• Final Variance Parameters: Final estimates of the variance parameters

• Initial Variance Parameters: Parameter and estimates for variance parameters

• Iteration History: Iteration, Obj_function, and column for each variance parameter

• Least Squares Means: Sort variable(s), dependent variable(s), units, and the least squares

means for the test formulation

• LSM Differences: Difference in least squares means

• Parameter Key: Variance parameter names assigned by Phoenix

• Partial SS: Only for 2x2 crossover designs using the default model: ANOVA sums of squares,

degrees of freedom, mean squares, F-tests and p-values for partial test

• Partial Tests: Sort Variable(s), Dependent Variable(s), Units, Hypothesis, Hypothesis degrees of

freedom, Denominator degrees of freedom, F statistic, and p-value

• Residuals: Sort Variable(s), Dependent Variable(s), Units, and information on residual effects vs.

predicted and observed effects

• Sequential SS: Only for 2x2 crossover designs using the default model: ANOVA sums of

squares, degrees of freedom, mean squares, F-tests and p-values for sequential test

• Sequential Tests: Sort Variable(s), Dependent Variable(s), Units, Hypothesis, Hypothesis

degrees of freedom, Denominator degrees of freedom, F statistic, and p-value

Population/Individual bioequivalence output

The worksheets that are created depend on which model options are selected.

• Population Individual: Output from the bioequivalence analysis. Columns include:

Dependent: Input data column mapped to the Dependent context.

Units: Units, if specified in the input dataset.

Statistic: Name of the statistic.

Value: Computed value of the statistic.

Upper_CI: Upper bound (an upper bound < 0 indicates bioequivalence).

Conclusion: Bioequivalence conclusion.

• Ratios Test: Ratios of reference to test mean. One worksheet for each test formulation.

• User Settings: User-specified settings

The population/individual bioequivalence statistics include:

Difference (Delta): Difference in sample means between the test and reference formulations.

Ratio(%Ref): Ratio of the test to reference means expressed as a percent. This is compared with

the percent of reference to detect that was specified by the user, to determine if bioequivalence

has been shown. The ratio is expected to be 100% if both formulations are exactly equal. If the

values of the two dependents are both over 100, it indicates that the test formulation resulted in

higher average exposure than the reference. Values <100 indicate the reverse. For example, if

the user specified a percent of reference to detect of 20%, and also specified a ln-transform, then

Ratio(%Ref) needs to be in the interval (80%, 125%) to show bioequivalence for the ratio test.


See “Least squares means” for more details.

SigmaR: value that is compared with sigmaP, the Total SD Standard, to determine whether mixed

scaling for population bioequivalence will use the constant-scaling values or the reference-scaling

values.

SigmaWR: value that is compared with sigmaI, the Within Subject SD Standard, to determine

whether mixed scaling for individual bioequivalence will use the constant-scaling values or the

reference-scaling values.

See “Bioequivalence criterion” for more details.

Computed bioequivalence statistics include:

Ref_Pop_eta: Test statistic for population bioequivalence with reference scaling

Const_Pop_eta: Test statistic for population bioequivalence with constant scaling

Mixed_Pop_eta: Test statistic for population bioequivalence with mixed scaling

Ref_Indiv_eta: Test statistic for individual bioequivalence with reference scaling

Const_Indiv_eta: Test statistic for individual bioequivalence with constant scaling

Mixed_Indiv_eta: Test statistic for individual bioequivalence with mixed scaling

Because population/individual bioequivalence only allows crossover designs, the output also contains

the ratios test, described under “Ratios test”.

Text output

The Bioequivalence object creates two types of text output: a Settings file that contains model settings, and an optional Core Output file. The Core Output text file is created only if the Core Output checkbox is selected in the General Options tab. The file contains a complete summary of the analysis, including all output as well as analysis settings and any errors that occur.

Ratios test

For any bioequivalence study done using a crossover design, the output also includes, for each test formulation, a table of differences and ratios of the original data computed individually for each subject.

Let test be the value of the dependent variable measured after administration of the test formulation for one subject. Let ref be the corresponding value for the reference formulation, for the case in which only one dependent variable measurement is made. If multiple test or reference values exist for the same subject, then let test be the mean of the values of the dependent variable measured after administration of the test formulation, and similarly for ref, except for the cases of ‘ln-transform’ and ‘log10-transform’ where the geometric mean should be used:

ln_transform: $\mathit{test} = \exp\left(\frac{\sum_i \ln X_i}{N}\right)$

log10_transform: $\mathit{test} = 10^{N^{-1} \sum_i \log_{10} X_i}$

where $X_i$ are the measurements after administration of the test formulation and N is the number of those measurements, and similarly for ref. For each subject, for the cases of no transform, ln-transform, or log10-transform, the ratios table contains:


Difference=test – ref

Ratio(%Ref)=100*test/ref

For data that was specified to be ‘already ln-transformed,’ these values are back-transformed to be in

terms of the original data:

Difference=exp(test) – exp(ref)

Ratio(%Ref)=100*exp(test – ref)=100*exp(test)/exp(ref)

Similarly, for data that was specified to be ‘already log10-transformed’:

Difference=10^test – 10^ref

Ratio(%Ref)=100*10^(test – ref)=100*10^test/10^ref

Note: For ‘already transformed’ input data, if the mean is used for test or ref, and the antilog of test or

ref is taken above, then this is equal to the geometric mean.
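The per-subject calculations described in this section can be sketched as follows. The function names and the transform labels ("none", "ln", "log10", "already_ln", "already_log10") are hypothetical choices for illustration; this is a minimal sketch of the stated formulas, not the Phoenix implementation.

```python
import math

def subject_summary(values, transform="none"):
    """Collapse one subject's replicate values for a formulation to one value."""
    if transform in ("ln", "log10"):
        # Geometric mean of the original-scale values. The base of the
        # logarithm does not matter: exp(mean(ln X)) equals 10**mean(log10 X).
        return math.exp(sum(math.log(v) for v in values) / len(values))
    # No transform, or data already on a log scale: arithmetic mean.
    return sum(values) / len(values)

def ratios_row(test_values, ref_values, transform="none"):
    """Return (Difference, Ratio(%Ref)) for one subject."""
    test = subject_summary(test_values, transform)
    ref = subject_summary(ref_values, transform)
    if transform == "already_ln":
        # Back-transform to the original scale.
        return math.exp(test) - math.exp(ref), 100 * math.exp(test - ref)
    if transform == "already_log10":
        return 10 ** test - 10 ** ref, 100 * 10 ** (test - ref)
    return test - ref, 100 * test / ref

# Hypothetical subject with two test measurements and one reference measurement.
diff_ln, ratio_ln = ratios_row([100.0, 400.0], [160.0], "ln")
```

Passing the same subject's data as already ln-transformed values gives the same difference and ratio up to rounding, which is the point of the note above about the antilog of a mean being the geometric mean.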


Bioequivalence overview

Bioequivalence is said to exist when a test formulation has a bioavailability that is similar to that of

the reference. There are three types of bioequivalence: Average, Individual, and Population. Average

bioequivalence states that average bioavailability for test formulation is the same as for the reference

formulation. Population bioequivalence is meant to assess equivalence in prescribability and takes

into account both differences in mean bioavailability and in variability of bioavailability. Individual bio-

equivalence is meant to assess switchability of products, and is similar to population bioequivalence.

The US FDA Guidance for Industry (January 2001) recommends that a standard in vivo bioequiva-

lence study design be based on the administration of either single or multiple doses of the test and

reference products to healthy subjects on separate occasions, with random assignment to the two

possible sequences of drug product administration. Further, the 2001 guidance recommends that sta-

tistical analysis for pharmacokinetic parameters, such as area under the curve (AUC) and peak con-

centration (Cmax) be based on a test procedure termed the two one-sided tests procedure to

determine whether the average values for pharmacokinetic parameters were comparable for test and

reference formulations. This approach is termed average bioequivalence and involves the calculation

of a 90% confidence interval for the ratio of the averages of the test and reference products. To estab-

lish bioequivalence, the calculated interval should fall within a bioequivalence limit, usually 80–125%

for the ratio of the product averages. In addition to specifying this general approach, the 2001 guid-

ance also provides specific recommendations for (1) logarithmic transformations of pharmacokinetic

data, (2) methods to evaluate sequence effects, and (3) methods to evaluate outlier data.

It is also recommended that average bioequivalence be supplemented by population and individual

bioequivalence. Population and individual bioequivalence include comparisons of both the averages

and variances of the study measure. Population bioequivalence assesses the total variability of the

measure in the population. Individual bioequivalence assesses within-subject variability for the test

and reference products as well as the subject-by-formulation interaction.

Bioequivalence studies

Bioequivalence between two formulations of a drug product, sometimes referred to as relative bio-

availability, indicates that the two formulations are therapeutically equivalent and will provide the

same therapeutic effect. The objective of a bioequivalence study is to determine whether bioequivalence exists between a test formulation and reference formulation and to identify whether the two formulations are pharmaceutical alternatives that can be used interchangeably to achieve the same effect.

Bioequivalence studies are important because establishing bioequivalence with an already approved

drug product is a cost-efficient way of obtaining drug approval. Bioequivalence studies are also useful

for testing new formulations of a drug product, new routes of administration of a drug product, and for

a drug product that has changed after approval.

There are three different types of bioequivalence: average bioequivalence, population bioequiva-

lence, and individual bioequivalence. All types of bioequivalence comparisons are based on the cal-

culation of a criterion (or point estimate), on the calculation of an interval for the criterion, and on a

predetermined acceptability limit for the interval.

A procedure for establishing average bioequivalence involves administration of either single or multi-

ple doses of the test and reference formulations to subjects, with random assignment to the possible

sequences of drug administration. Then a statistical analysis of pharmacokinetic parameters such as

AUC and Cmax is done on a log-transformed scale to determine the difference in the average values

of these parameters between the test and reference data, and to determine the interval for the differ-

ence. To establish bioequivalence, the interval, usually calculated at the 90% level, should fall within a

predetermined acceptability limit, usually within 20% of the reference average. An equivalent

approach using two, one-sided t-tests is also recommended. Both the interval approach and the two

one-sided t-tests are described further below.
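The interval check described in the paragraph above can be sketched in a few lines. The function name, the inclusive comparison, and the example numbers are assumptions for illustration; the critical t value must be supplied by the caller because the Python standard library has no t quantile function.

```python
import math

def average_be_interval(diff, se, t_crit, lower=80.0, upper=125.0):
    """Back-transform an interval computed on the ln scale.

    diff   : test minus reference difference on the ln scale
    se     : standard error of that difference
    t_crit : critical t value for the chosen level and degrees of freedom
    Returns (lower bound %, upper bound %, interval inside the limits?).
    """
    lo = 100 * math.exp(diff - t_crit * se)
    hi = 100 * math.exp(diff + t_crit * se)
    return lo, hi, (lower <= lo and hi <= upper)

# Hypothetical inputs; 1.717 is roughly the 95th percentile of t with 22 df,
# which corresponds to a two-sided 90% interval.
lo, hi, inside = average_be_interval(diff=0.05, se=0.04, t_crit=1.717)
```

Here the interval is computed on the log scale and exponentiated, so the limits are compared with the ratio of the product averages expressed as a percent of the reference.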


The average bioequivalence should be supplemented by population and individual bioequivalences.

These added approaches are needed because average bioequivalence uses only a comparison of

averages, and not the variances, of the bioequivalence measures. In contrast, population and individ-

ual bioequivalence approaches include comparisons of both the averages and variances of the study

measure. The population bioequivalence approach assesses the total variability of the study measure

in the population. The individual bioequivalence approach assesses within-subject variability for the

test and reference products as well as the subject-by-formulation interaction.

The concepts of population and individual bioequivalence are related to two types of drug inter-

changeability: prescribability and switchability. Drug prescribability refers to when a physician pre-

scribes a drug product to a patient for the first time, and must choose from a number of bioequivalent

drug products. Drug prescribability is usually assessed by population bioequivalence. Drug switch-

ability refers to when a physician switches a patient from one drug product to a bioequivalent product,

such as when switching from an innovator drug product to a generic substitute within the same

patient. Drug switchability is usually assessed by individual bioequivalence.

Covariance structure types

The covariance types used in the Bioequivalence model object are the same as those used in the Lin-

ear Mixed Effects model object. For more on variance structures see

“Variance structure” in the Lin-

Mix section. Covariance types must be specified if the model contains random effects.

The Variance Structure tab in the Bioequivalence object allows users to select the covariance type

used in the model. The variances of the random-effects parameters become the covariance parame-

ters for a bioequivalence model that contains both fixed effect and random effect parameters.

The choices for covariance structures are listed below. The list uses the following notation:

n: number of groups. n=1 if the group option is not used.

t: number of time points in the repeated context.

b: dimension parameter: for some variance structures, the number of bands; for others, the num-

ber of factors.

1(expression): 1 if the expression is true, 0 if the expression is false.

Variance Components have n parameters; (i, j)th element: $\sigma_k^2 \, 1(i = j)$, where i corresponds to the kth effect.

Unstructured has n x t x (t + 1)/2 parameters; (i, j)th element: $\sigma_{ij}$; in output, $\sigma_{ij}$ = un(i,j).

Banded Unstructured (b) has n x b/2(2t – b + 1) parameters if b < t; otherwise, same as Unstructured; (i, j)th element: $\sigma_{ij} \, 1(|i - j| < n)$.

Compound Symmetry has n x 2 parameters; (i, j)th element: $\sigma_1 + \sigma^2 \, 1(i = j)$; in output, $\sigma^2$ = csDiag, $\sigma_1$ = csBlock.

Heterogeneous Compound Symmetry has n x (t + 1) parameters; (i, j)th element: $\sigma_i \sigma_j \, [\rho \, 1(i \ne j) + 1(i = j)]$; in output, $\sigma_i$ = cshSD(i), $\rho$ = cshCorr.

Autoregressive (1) has n x 2 parameters; (i, j)th element: $\sigma^2 \rho^{|i - j|}$; in output, $\sigma^2$ = arVar, $\rho$ = arCorr.

Heterogeneous Autoregressive (1) has n x (t + 1) parameters; (i, j)th element: $\sigma_i \sigma_j \rho^{|i - j|}$; in output, $\sigma_i$ = arhSD(i), $\rho$ = arhCorr.

No-Diagonal Factor Analytic has n x t(t + 1)/2 parameters; (i, j)th element: $\sum_{k=1}^{\min(i,j,n)} \lambda_{ik} \lambda_{jk}$; in output, $\lambda_{ik}$ = lambda(i,k).

Banded No-Diagonal Factor Analytic (b) has n x b/2(2t – b + 1) parameters if b < t; otherwise, same as No-Diagonal Factor Analytic; (i, j)th element: $\sum_{k=1}^{\min(i,j,b)} \lambda_{ik} \lambda_{jk}$; in output, $\lambda_{ik}$ = lambda(i,k).
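To make the element definitions concrete, the sketch below builds a single t x t block for three of the structures. It assumes the usual forms, namely csBlock + csDiag·1(i = j) for Compound Symmetry, arVar·arCorr^|i – j| for Autoregressive (1), and arhSD(i)·arhSD(j)·arhCorr^|i – j| for Heterogeneous Autoregressive (1). The function names are illustrative and not part of Phoenix.

```python
def compound_symmetry(t, cs_block, cs_diag):
    """t x t block: cs_block everywhere, plus cs_diag added on the diagonal."""
    return [[cs_block + (cs_diag if i == j else 0.0) for j in range(t)]
            for i in range(t)]

def autoregressive_1(t, ar_var, ar_corr):
    """t x t block: variance times correlation raised to the lag |i - j|."""
    return [[ar_var * ar_corr ** abs(i - j) for j in range(t)]
            for i in range(t)]

def heterogeneous_ar1(sds, corr):
    """Block with a separate standard deviation for each time point."""
    t = len(sds)
    return [[sds[i] * sds[j] * corr ** abs(i - j) for j in range(t)]
            for i in range(t)]

# Hypothetical parameter values for a block with three time points.
cs = compound_symmetry(3, cs_block=0.5, cs_diag=2.0)
ar = autoregressive_1(3, ar_var=4.0, ar_corr=0.5)
```

Each function returns one block; with a group variable, a block of the same structure would be built for each group level from that group's own parameters.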

Data limits and constraints

The limits and constraints discussed here are for Bioequivalence and Linear Mixed Effects modeling.

Cell data may not include question marks (?) or either single (') or double (") quotation marks.

Variable names must begin with a letter. After the first character, valid characters include numbers

and underscores (my_file_2). They cannot include spaces or the following operational symbols: +, –,

*, /, =. They also cannot include parentheses (), question marks (?), semicolons (;), single or double

quotes.
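The naming and cell-content rules above can be checked before importing a dataset. The following is a minimal sketch with hypothetical function names; it assumes that ASCII letters are also allowed after the first character, which the text implies through the my_file_2 example but does not state outright.

```python
import re

# A letter first, then letters, digits, or underscores (assumed ASCII only).
_NAME = re.compile(r"[A-Za-z][A-Za-z0-9_]*")

# Characters that cell data may not contain.
_FORBIDDEN_IN_CELLS = set("?'\"")

def is_valid_variable_name(name):
    """True if the whole name matches the pattern above."""
    return _NAME.fullmatch(name) is not None

def is_valid_cell(text):
    """True if the cell text has no question marks or quotation marks."""
    return not any(ch in _FORBIDDEN_IN_CELLS for ch in text)

ok_name = is_valid_variable_name("my_file_2")
bad_name = is_valid_variable_name("AUC last")
```

Because the pattern admits only letters, digits, and underscores, it rejects spaces, operational symbols, parentheses, semicolons, and quotes without listing each one.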

Titles (in Contrasts, Estimates, or ASCII) can have single or double quotation marks, but not both.

The following are maximum counts for variables.

| Item | Maximum |
|---|---|
| model terms | 30 |
| factors in a model term | 10 |
| sort keys | 16 |
| dependent variables | 128 |
| covariate/regressor variables | 255 |
| variables in the dataset | 256 |
| levels per variable | 1,000 |
| random and repeated statements | 10 |
| contrast statements | 100 |
| estimate statements | 100 |
| combined length of all variance parameter names (total characters) | 10,000 |
| combined length of all level names (total characters) | 10,000 |
| combined length of input data line (total characters in line of data or column headers) | 2,500 |


Average bioequivalence study designs

The most common designs for bioequivalence studies are replicated crossover, nonreplicated cross-

over, and parallel. In a parallel design, each subject receives only one formulation in randomized fash-

ion, whereas in a crossover design each subject receives different formulations in different time

periods. Crossover designs are further broken down into replicated and nonreplicated designs. In

nonreplicated designs, subjects receive only one dose of the test formulation and only one dose of the

reference formulation. Replicated designs involve multiple doses. A bioequivalence study should use

a crossover design unless a parallel or other design can be demonstrated to be more appropriate for

valid scientific reasons. Replicated crossover designs should be used for individual bioequivalence

studies, and can be used for average or population bioequivalence analysis.

An example of a nonreplicated crossover design is the standard 2x2 crossover design described

below.

Information about the following topics is available:

Recommended models for average bioequivalence

Least squares means

Classical intervals

Two one-sided t-tests

Power of the two one-sided t-tests procedure

Anderson-Hauck test

Power for 80/20 Rule

Tests for equal variances

Recommended models for average bioequivalence

The default fixed effects, random effects, and repeated models for average bioequivalence studies depend on the type of study design: replicated crossover, nonreplicated crossover, or parallel.

Replicated crossover designs

Replicated data is defined as data for which, for each formulation, there exists at least one subject

with more than one observation of that formulation. The default models depend on the type of analy-

ses and the main mappings. For replicated crossover designs, the default model used in the Bioequiv-

alence object is as follows:

Fixed effects model: Sequence + Formulation + Period

Random effects model: Subject(Sequence) and Type: Variance Components

Repeated specification: Period

Variance Blocking Variables: Subject

Group: Treatment

Type: Variance Components
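The definition of replicated data given at the start of this section can be expressed as a short check. This is a sketch under stated assumptions: the function name and the (subject, formulation) record layout are hypothetical, and it says nothing about how Phoenix detects the design internally.

```python
from collections import Counter

def is_replicated(records):
    """records: iterable of (subject, formulation) pairs, one per observation.

    Returns True if, for each formulation, at least one subject has more
    than one observation of that formulation.
    """
    counts = Counter(records)
    formulations = {form for _, form in counts}
    if not formulations:
        return False
    return all(
        any(n > 1 for (_, f), n in counts.items() if f == form)
        for form in formulations
    )

# Hypothetical designs: a standard 2x2 crossover and a four-period replicate.
two_by_two = [(1, "T"), (1, "R"), (2, "R"), (2, "T")]
four_period = [(1, "T"), (1, "R"), (1, "T"), (1, "R"),
               (2, "R"), (2, "T"), (2, "R"), (2, "T")]
```

Under this definition a design that repeats only the reference formulation is not replicated, because no subject has more than one observation of the test formulation.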


Nonreplicated crossover designs

Nonreplicated data is defined as data for which there exists at least one formulation where every sub-

ject has only one observation of that formulation. The default models depend on the type of analyses,

the main mappings, and a preference called Default for 2x2 crossover set to all fixed effects (set

under Edit > Preferences > LinMixBioequivalence). For nonreplicated crossover designs, the

default model is as follows.

Fixed effects model: Sequence + Formulation + Period

Unless the bioequivalence preference Default for 2x2 crossover set to all fixed effects is

turned on in the Preferences dialog (Edit > Preferences > LinMixBioequivalence), in which

case the model is Sequence + Subject(Sequence) + Formulation + Period.

Random effects model: Subject(Sequence) and Type: Variance Components

Unless the bioequivalence preference Default for 2x2 crossover set to all fixed effects is

turned on, in which case the model is not specified (the field is empty).

Repeated model is not specified.

Since there is no repeated specification, the default error model $\varepsilon \sim N(0, \sigma^2 I)$ is used. This is equivalent to the classical analysis method, but using maximum likelihood instead of method of moments to

estimate inter-subject variance. Using Subject as a random effect this way, the correct standard errors

will be computed for sequence means and tests of sequence effects. Using a fixed effect model, one

must construct pseudo-F tests by hand to accomplish the same task.

Note: If Warning 11094 occurs, “Negative final variance component. Consider omitting this VC structure.”, when Subject(Sequence) is used as a random effect, this most likely indicates that the within-subject variance (residual) is greater than the between-subject variance, and a more appropriate model would be to move Subject(Sequence) from the random effects to the fixed effects model, i.e., Sequence + Subject(Sequence) + Formulation + Period.

When this default model is used for a standard 2x2 crossover design, Phoenix creates two additional

worksheets in the output called Sequential SS and Partial SS, which contain the degrees of freedom

(DF), Sum of Squares (SS), Mean Squares (MS), F-statistic and p-value, for each of the model terms.

These tables are also included in the text output. Note that the F-statistic and p-value for the

Sequence term are using the correct error term since Subject(Sequence) is a random effect.

If the default model is used for 2x2 and the data is not transformed, the intrasubject CV parameter is added to the Final Variance Parameters worksheet:

intrasubject CV=sqrt(Var(Residual))/RefLSM

where RefLSM is the Least Squares Mean of the reference treatment.

If the default model is used for 2x2 and the data is either ln-transformed or log10-transformed, the intersubject CV and intrasubject CV parameters are added to the Final Variance Parameters worksheet:

For ln-transformed data:

intersubject CV=sqrt(exp(Var(Sequence*Subject)) – 1)

intrasubject CV=sqrt(exp(Var(Residual)) – 1)

For log10-transformed data:

intersubject CV=sqrt(10^(ln(10)*Var(Sequence*Subject)) – 1)

intrasubject CV=sqrt(10^(ln(10)*Var(Residual)) – 1)


Note that for this default model Var(Sequence*Subject) is the intersubject (between subject) variance, and Var(Residual) is the intrasubject (within subject) variance.
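The CV formulas above can be sketched in Python as follows. This is only an illustration of the arithmetic, not Phoenix code: the function names and the `transform` argument are hypothetical, and the variance arguments stand for the Var(Sequence*Subject) and residual variance values reported in the Final Variance Parameters worksheet.

```python
import math

def transformed_cvs(var_between, var_within, transform):
    """Return (intersubject CV, intrasubject CV) for log-transformed data.

    var_between: Var(Sequence*Subject), on the transformed scale
    var_within:  residual variance, on the transformed scale
    transform:   "ln" or "log10" (hypothetical argument for this sketch)
    """
    if transform == "ln":
        inter = math.sqrt(math.exp(var_between) - 1)
        intra = math.sqrt(math.exp(var_within) - 1)
    elif transform == "log10":
        k = math.log(10)  # ln(10)
        inter = math.sqrt(10 ** (k * var_between) - 1)
        intra = math.sqrt(10 ** (k * var_within) - 1)
    else:
        raise ValueError("transform must be 'ln' or 'log10'")
    return inter, intra

def untransformed_intrasubject_cv(var_within, ref_lsm):
    """Intrasubject CV for untransformed data: sqrt(Var(Residual))/RefLSM."""
    return math.sqrt(var_within) / ref_lsm
```

A variance expressed in log10 units equals the corresponding ln-scale variance divided by ln(10)^2, so both branches should give the same CV for the same underlying data.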

Parallel designs

For parallel designs, whether data is replicated or nonreplicated, the default model is as follows.

Fixed effects model: Formulation

There is no random model and no repeated specification, so the residual error term is included in the

model.
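The default-model rules for the three design types can be summarized in a short Python sketch. It is not part of Phoenix: `is_replicated`, `default_model`, and the dictionary keys are hypothetical names chosen for illustration, and the flag `all_fixed_2x2` stands in for the preference Default for 2x2 crossover set to all fixed effects.

```python
from collections import Counter

def is_replicated(records):
    """records: iterable of (subject, formulation) pairs, one per observation.

    Replicated means that, for each formulation, at least one subject has
    more than one observation of that formulation.
    """
    counts = Counter(records)
    formulations = {form for _, form in counts}
    return bool(formulations) and all(
        any(n > 1 for (_, f), n in counts.items() if f == form)
        for form in formulations
    )

def default_model(design, all_fixed_2x2=False):
    """design: 'replicated', 'nonreplicated', or 'parallel'."""
    if design == "replicated":
        return {
            "fixed": "Sequence + Formulation + Period",
            "random": "Subject(Sequence)",  # Type: Variance Components
            "repeated": {
                "specification": "Period",
                "variance_blocking": "Subject",
                "group": "Treatment",
                "type": "Variance Components",
            },
        }
    if design == "nonreplicated":
        if all_fixed_2x2:
            # Subject(Sequence) moves to the fixed effects; random is empty.
            return {
                "fixed": "Sequence + Subject(Sequence) + Formulation + Period",
                "random": None,
                "repeated": None,
            }
        return {
            "fixed": "Sequence + Formulation + Period",
            "random": "Subject(Sequence)",  # Type: Variance Components
            "repeated": None,
        }
    if design == "parallel":
        return {"fixed": "Formulation", "random": None, "repeated": None}
    raise ValueError("unknown design: " + repr(design))
```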

Note: In each case, users can supplement or modify the model to suit the analysis needs of the dataset.

For example, if a Subject effect is appropriate for the Parallel option, as in a paired design (each

subject receives the same formulation initially and then the other formulation after a washout

period), set the Fixed Effects model to Subject+Formulation.

Note: When mapping a new input dataset to a Bioequivalence object, if the mappings are identical for

the new mapped dataset, then the model will remain the same. If there are any mapping changes

other than mapping an additional dependent variable, then the model will be rebuilt to the default

model since the existing model will not be valid anymore. This occurs for mapping changes either

made by the user or made automatically due to different column names in the dataset matching

the mapping context.

The user can reset to the default model at any time by making any mapping change, such as

unmapping and remapping a column.

Least squares means

In determining the bioequivalence of a test formulation and a reference formulation, the first step is the computation of the least squares means (LSM) and standard errors of the test and reference formulations and the standard error of the difference of the test and reference least squares means. These quantities are computed by the same process that is used for the Linear Mixed Effects module. See “Least squares means” in the LinMix section.

To simplify the notation for this and the following sections, let:

RefLSM: reference least squares mean,

TestLSM: test least squares mean,

fractionToDetect: (user-specified percent of reference to detect)/100,

DiffSE: standard error of the difference in LSM,

RatioSE: standard error of the ratio of the least squares means,

df: degrees of freedom for the difference in LSM.

The geometric LSM are computed for transformed data. For ln-transform or data already ln-transformed,

RefGeoLSM = exp(RefLSM)

TestGeoLSM = exp(TestLSM)

For log10-transform or data already log10-transformed,

RefGeoLSM = 10^RefLSM

TestGeoLSM = 10^TestLSM


The difference is the test LSM minus the reference LSM,

Difference = TestLSM – RefLSM

The ratio calculation depends on data transformation. For non-transformed data,

Ratio(%Ref) = 100*(TestLSM/RefLSM)

For ln-transform or data already ln-transformed, the ratio is obtained on arithmetic scale by exponentiating,

Ratio(%Ref) = 100*exp(Difference)

Similarly for log10-transform or data already log10-transformed, the ratio is

Ratio(%Ref) = 100*10^Difference

Classical intervals

Output from the Bioequivalence module includes the classical intervals for confidence levels equal to 80, 90, 95, and for the confidence level that the user gave on the Options tab if that value is different than 80, 90, or 95. To compute the intervals, first the following values are computed using the Student's t-distribution, where 2*alpha=(100 – Confidence Level)/100, and Confidence Level is specified in the user interface.

Lower = Difference – t(1 – alpha, df)*DiffSE

Upper = Difference + t(1 – alpha, df)*DiffSE

Here t(1 – alpha, df) is the (1 – alpha) quantile of the t-distribution with df degrees of freedom.

These values are included in the output for the no-transform case. These values are then transformed if necessary to be on the arithmetic scale, and translated to percentages. For ln-transform or data already ln-transformed,

CI_Lower = 100*exp(Lower)

CI_Upper = 100*exp(Upper)

For log10-transform or data already log10-transformed,

CI_Lower = 100*10^Lower

CI_Upper = 100*10^Upper

For no transform,

CI_Lower = 100*(1 + Lower/RefLSM)

= 100*(1 + (TestLSM – RefLSM)/RefLSM – t(1 – alpha, df)*DiffSE/RefLSM)

= 100*(TestLSM/RefLSM – t(1 – alpha, df)*RatioSE)

where the approximation RatioSE=DiffSE/RefLSM is used. Similarly,

CI_Upper = 100*(1 + Upper/RefLSM)
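The ratio and classical interval calculations in this section can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the module's implementation: the function name and the `transform` argument are hypothetical, and the t quantile t(1 – alpha, df) is passed in as `t_crit` so that the sketch needs only the standard library (it could be obtained, for example, from `scipy.stats.t.ppf(1 - alpha, df)`).

```python
import math

def classical_interval(test_lsm, ref_lsm, diff_se, t_crit, transform="none"):
    """Return (Ratio(%Ref), CI_Lower, CI_Upper), all as percentages.

    test_lsm, ref_lsm: least squares means on the analysis scale
    diff_se:           standard error of the difference in LSM
    t_crit:            t(1 - alpha, df), supplied by the caller
    transform:         "none", "ln", or "log10" (hypothetical argument)
    """
    difference = test_lsm - ref_lsm
    lower = difference - t_crit * diff_se
    upper = difference + t_crit * diff_se

    if transform == "ln":
        # Back-transform to the arithmetic scale by exponentiating.
        return tuple(100 * math.exp(x) for x in (difference, lower, upper))
    if transform == "log10":
        return tuple(100 * 10 ** x for x in (difference, lower, upper))
    if transform == "none":
        # Uses the approximation RatioSE = DiffSE/RefLSM.
        ratio = 100 * test_lsm / ref_lsm
        ci_lower = 100 * (1 + lower / ref_lsm)
        ci_upper = 100 * (1 + upper / ref_lsm)
        return ratio, ci_lower, ci_upper
    raise ValueError("transform must be 'none', 'ln', or 'log10'")
```

For untransformed data, `100 * (1 + lower / ref_lsm)` is algebraically the same as `100 * (test_lsm / ref_lsm - t_crit * diff_se / ref_lsm)`, which is the last form of CI_Lower given in the text.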

Concluding whether bioequivalence is shown depends on the user-specified values for the level and for the percent of reference to detect. These options are set on the Options tab in the Bioequivalence object. To conclude whether bioequivalence has been achieved, the CI_Lower and CI_Upper for the user-specified value of the level are compared to the following lower and upper bounds. Note that the upper bound for log10 or ln-transforms or for data already transformed is adjusted so that the bounds are symmetric on a logarithmic scale.

LowerBound = 100 – (percentReferenceToDetect)

UpperBound (ln-transforms) = 100*exp(–ln(1 – fractionToDetect)) = 100*(1/(1 – fractionToDetect))

UpperBound (log10-transforms) = 100*10^(–log10(1 – fractionToDetect)) = 100*(1/(1 – fractionToDetect))

UpperBound (no transform) = 100 + (percentReferenceToDetect)

If the interval (CI_Lower, CI_Upper) is contained within LowerBound and UpperBound, average bioequivalence has been shown. If the interval (CI_Lower, CI_Upper) is completely outside the interval (LowerBound, UpperBound), average bioinequivalence has been shown. Otherwise, the module has failed to show bioequivalence or bioinequivalence.
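The bounds and the three-way conclusion described above can be illustrated with a short Python sketch. The function names are hypothetical, and treating an interval endpoint that exactly equals a bound as "contained" is an assumption of this sketch; the text does not say how ties are handled.

```python
def equivalence_bounds(percent_to_detect, transform="none"):
    """Return (LowerBound, UpperBound) as percentages of the reference."""
    fraction_to_detect = percent_to_detect / 100
    lower_bound = 100 - percent_to_detect
    if transform in ("ln", "log10"):
        # Symmetric on the log scale: both transforms reduce to 100/(1 - f).
        upper_bound = 100 / (1 - fraction_to_detect)
    elif transform == "none":
        upper_bound = 100 + percent_to_detect
    else:
        raise ValueError("transform must be 'none', 'ln', or 'log10'")
    return lower_bound, upper_bound

def conclude(ci_lower, ci_upper, lower_bound, upper_bound):
    """Classify the interval against the bounds (ties count as inside)."""
    if lower_bound <= ci_lower and ci_upper <= upper_bound:
        return "average bioequivalence shown"
    if ci_upper < lower_bound or ci_lower > upper_bound:
        return "average bioinequivalence shown"
    return "failed to show bioequivalence or bioinequivalence"
```

With 20 percent of reference to detect, the bounds come out at about 80 and 125 for log-transformed data, and 80 and 120 for untransformed data.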

Two one-sided t-tests

For ln-transform or data already ln-transformed, the first t-test is a left-tail test of the hypotheses:

H0: trueDifference < ln(1 – fractionToDetect) (bioinequivLeftTest)

H1: trueDifference ≥ ln(1 – fractionToDetect) (bioequivLeftTest)

The test statistic for performing this test is:

t1 = ((TestLSM – RefLSM) – ln(1 – fractionToDetect))/DiffSE

The p-value is determined using the t-distribution for this t-value and the degrees of freedom. If the p-value is <0.05, then the user can reject H0 at the 5% level, i.e., less than a 5% chance of rejecting H0 when it was actually true.

For log10-transform or data already log10-transformed, the first test is done similarly using log10

instead of ln.

For data with no transformation, the first test is (where Ratio here refers to the true ratio of the Test

mean to the Reference mean):

H0: Ratio < 1 – fractionToDetect

H1: Ratio ≥ 1 – fractionToDetect

The test statistic for performing this test is:

t1 = [(TestLSM/RefLSM) – (1 – fractionToDetect)]/RatioSE



where the approximation RatioSE=DiffSE/RefLSM is used.

The second t-test is a right-tail test that is a symmetric test to the first. However for log10 or ln-transforms, the test will be symmetric on a logarithmic scale. For example, if the percent of reference to detect is 20%, then the left-tail test is Pr(<80%), but for ln-transformed data, the right-tail test is Pr(>125%), since ln(0.8) = –ln(1.25).

For ln-transform or data already ln-transformed, the second test is a right-tail test of the hypotheses:

H0: trueDifference > –ln(1 – fractionToDetect) (bioinequivRightTest)

H1: trueDifference ≤ –ln(1 – fractionToDetect) (bioequivRightTest)

The test statistic for performing this test is:

t2 = ((TestLSM – RefLSM) + ln(1 – fractionToDetect))/DiffSE
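As an illustration, the two test statistics for ln-transformed data can be computed with the Python sketch below. The function names are hypothetical. The decision helper uses the usual two one-sided tests rule (reject the left null when t1 exceeds the critical value, reject the right null when t2 falls below its negative); that rule is an assumption of this sketch rather than something stated in the text, and the critical value t(1 – alpha, df) is supplied by the caller.

```python
import math

def tost_statistics_ln(test_lsm, ref_lsm, diff_se, fraction_to_detect):
    """Return (t1, t2) for ln-transformed data.

    t1 tests H0: trueDifference <  ln(1 - fractionToDetect)
    t2 tests H0: trueDifference > -ln(1 - fractionToDetect)
    """
    log_limit = math.log(1 - fraction_to_detect)  # negative, e.g. ln(0.8)
    difference = test_lsm - ref_lsm
    t1 = (difference - log_limit) / diff_se
    t2 = (difference + log_limit) / diff_se
    return t1, t2

def both_nulls_rejected(t1, t2, t_crit):
    """Assumed decision rule: both one-sided nulls are rejected."""
    return t1 > t_crit and t2 < -t_crit
```

When TestLSM equals RefLSM the two statistics are equal in magnitude and opposite in sign, which reflects the symmetry of the limits on the logarithmic scale.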